- FuriosaAI pursuing $300 million to $500 million Series D round, its final private push before a projected 2027 IPO.

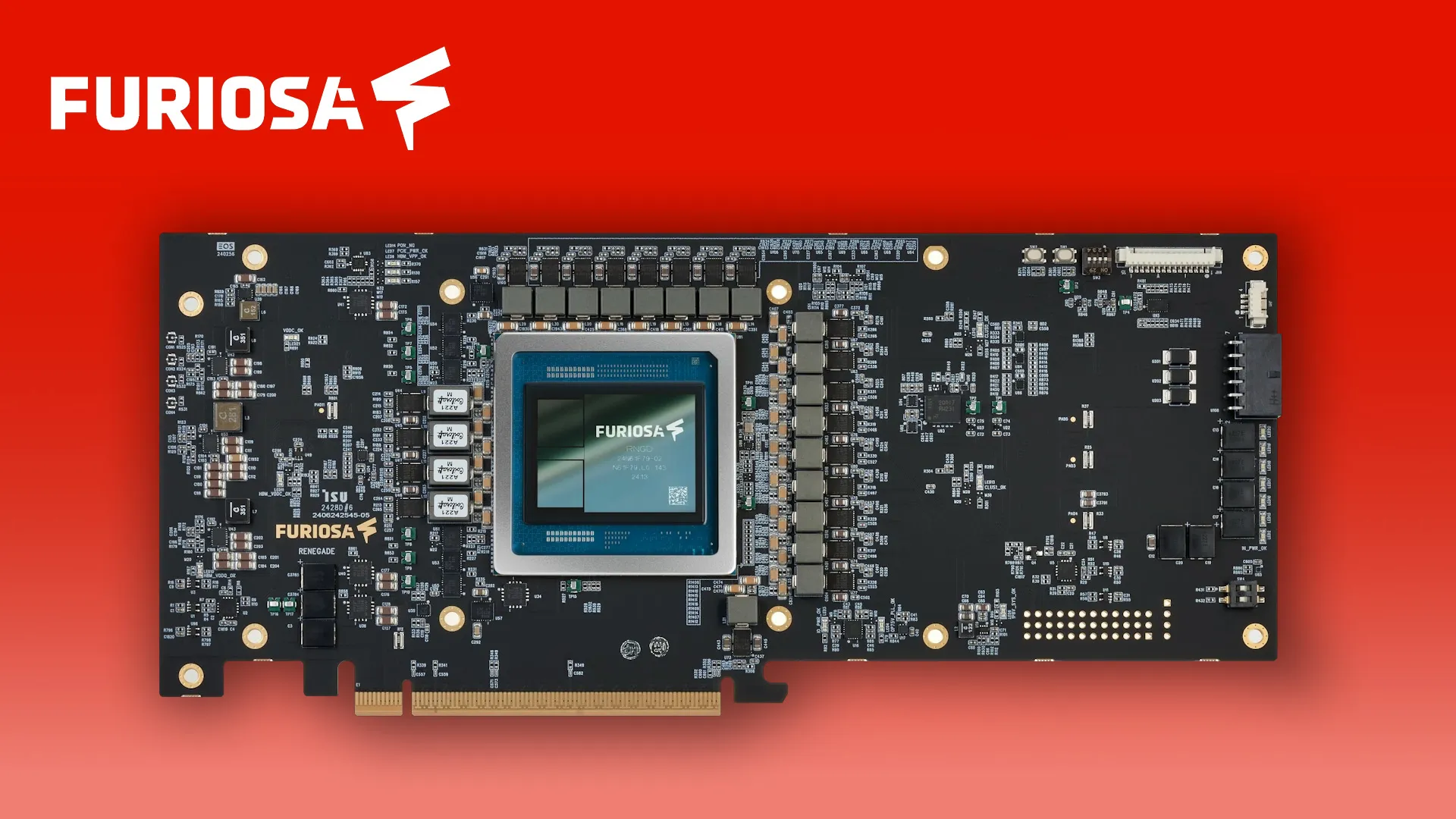

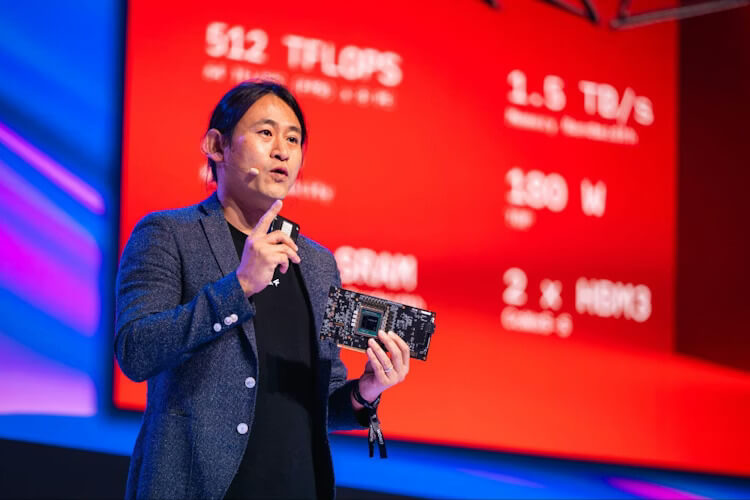

- The specification of the 2nd gen RNGD AI Chip

- The company turned down an $800 million buyout from Meta.

- The company founders and background

In the high-stakes arena of AI semiconductors, most startups dream of a "Big Tech" exit. But FuriosaAI, the South Korean powerhouse founded in 2017, is taking the road less traveled and far more expensive.

Currently, the company is making waves as it hunts for a Series D investment of $300 million to $500 million. With Morgan Stanley and Mirae Asset Securities as its advisors, this final private push is the bridge to a projected 2027 IPO. But why is this "NVIDIA Challenger" asking for so much now, and why did they walk away from a near-billion-dollar payday.

The Last Financial Climb Before IPO: Why Series D?

To understand the current $500 million goal, we have to look at the massive capital required to fight a war on the silicon front lines. FuriosaAI's funding history reads like a masterclass in scaling:

- Series A (2019): Raised $7 million to prove their architectural concept.

- Series B (2021): Secured $70 million, leading to the birth of their first-gen chip, Warboy.

- Series C (2025): Raised $125 million to finalize the 2nd-gen RNGD design.

The "Why" behind Series D: While Series C was enough to sample the chip, mass production is a different war. The company needs this $500 million for 3 critical reasons:

- TSMC Manufacturing: The first commercial batch of the RNGD chips is arriving from TSMC. Scaling to thousands of units requires upfront payments in the hundreds of millions.

- Global Expansion: They are moving beyond Seoul, aggressively hiring in Silicon Valley and Europe.

- 3rd-Gen R&D funds: In the chip war, if you aren't designing the next chip while the selling the current one, you're already losing.

The Gen 2 RNGD

The breakthrough of the RNGD lies in its architecture. Most chips rely on Matrix Multiplication (MatMul) units, which are powerful but "leaky", they often leave compute power sitting idle when processing the complex, irregular shapes of modern AI data.

FuriosaAI’s TCP (Tensor Contraction Processor) architecture treats tensor contraction as a native primitive. This allows the chip to reuse data locally on the silicon far more effectively, cutting down the energy-heavy "trips" data has to take between the chip and the memory

Key Specifications:

- Process Node: TSMC 5nm (Optimized for 1.0 GHz frequency).

- Compute Power: 512 TFLOPS (FP8) / 1024 TOPS (INT4).

- Memory: 48GB of HBM3 with a massive 1.5 TB/s bandwidth.

- On-Chip SRAM: 256MB (Designed to keep the model's "thoughts" local and fast).

- Physical Form: A standard PCIe Gen5 x16 card that plugs into existing air-cooled servers.

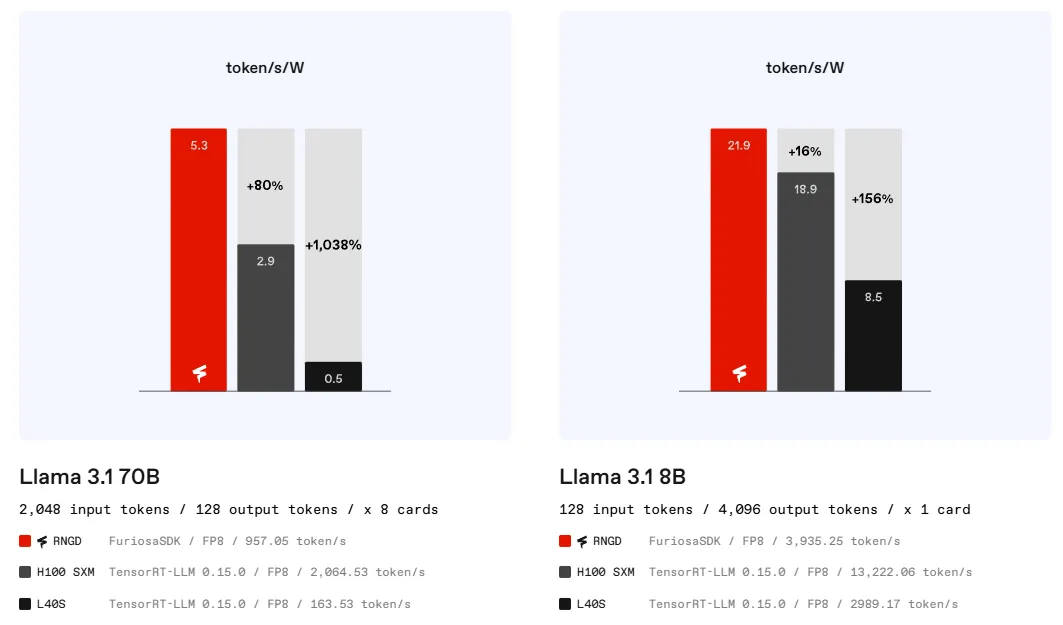

To prove this isn't just a lab experiment, FuriosaAI benchmarked the RNGD against the industry's most popular open-weight model: Meta’s Llama 3.1.

For the Llama 3.1 8B model, a single RNGD card delivers between 2,000 to 3,000 tokens per second (TPS) in multi-user scenarios. Even more impressive is the Llama 3.1 70B, one of the world's most demanding models. While massive GPUs often require complex liquid cooling and 700W of power to run this model effectively, a single RNGD card can handle it with ease, delivering smooth, real-time response speeds (40–60 TPS for single users) while staying within a slim 150W to 180W power envelope.

RNGD vs. NVIDIA: A Battle of Efficiency

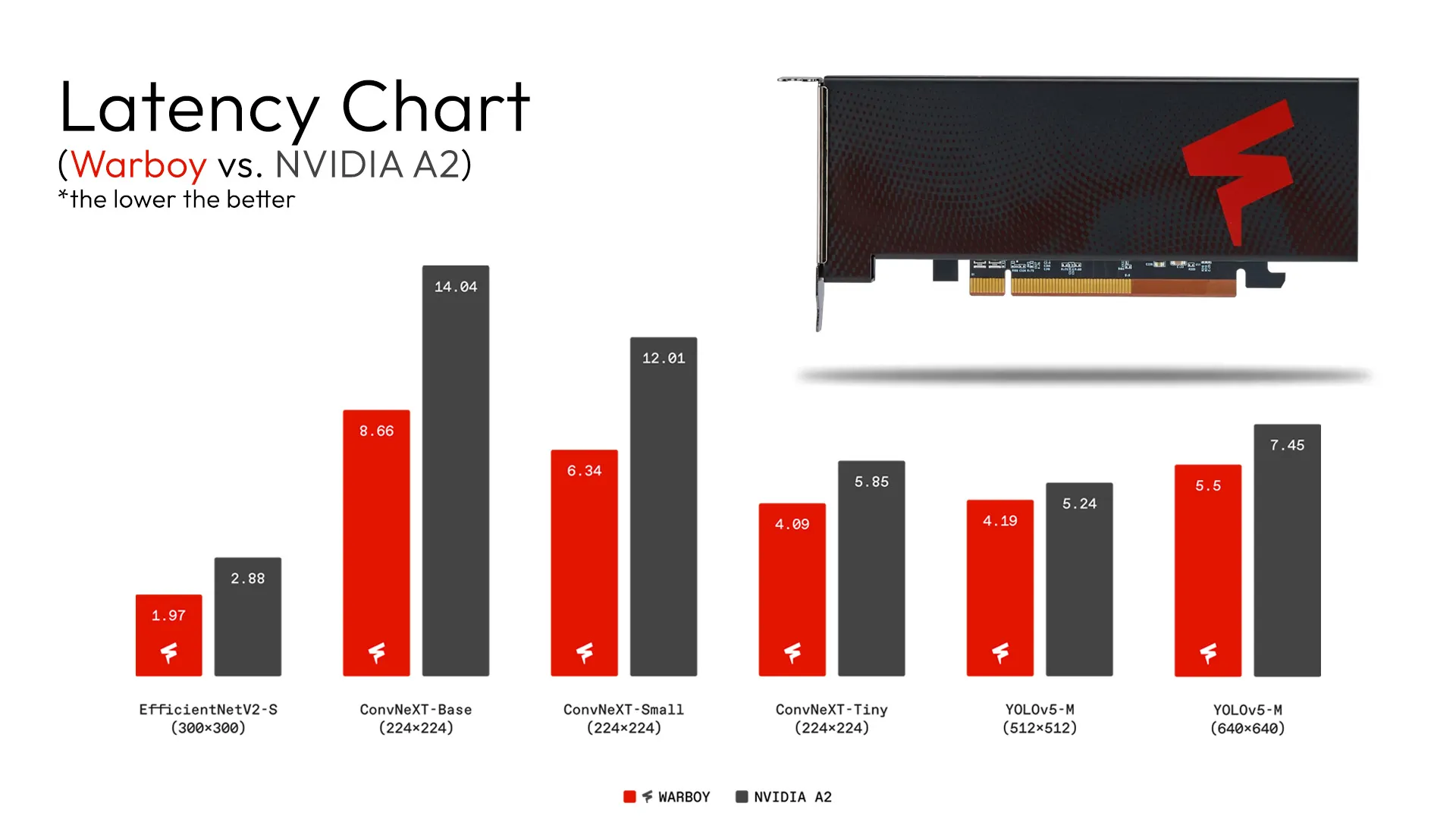

While NVIDIA’s H100 and the newer Blackwell (B200) are the undisputed kings of raw "brute force" training, FuriosaAI has designed the RNGD specifically for the inference phase—the stage where a model is actually being used by customers.

The comparison isn't just about speed; it’s about Power-to-Performance ratios:

The "Renegade" Edge: NVIDIA GPUs are like high-performance drag racers, an unbeatable in a sprint (training), but they consume massive amounts of fuel and require specialized cooling. The RNGD is like a Tesla: designed to do the same daily commute (inference) with a fraction of the energy. For a data center owner, choosing RNGD isn't just a tech upgrade; it’s a massive reduction in electricity and cooling costs.

The Billion-Dollar "No" to Meta

In March 2025, Meta (Facebook) came knocking with an $800 million acquisition offer. For a startup valued at roughly $545 million at the time, this was a massive premium.

The Reason for the Rejection: Meta wanted to turn FuriosaAI into an in-house specialized shop to build custom silicon for Instagram’s recommendation engines and Facebook’s LLMs. CEO June Paik and his team chose independence instead. They believe their architecture is a "universal" solution that can serve the entire global market, not just one social media giant. They didn't want to be a department; they wanted to be the standard.

Real-World Proof: The EXONE Partnership

This isn't just theoretical. The RNGD chip is already proving its worth through major industrial partnerships:

- LG AI Research (EXONE):

LG put the RNGD through seven months of grueling tests. The result? The chip ran LG’s EXONE 3.5 foundation models with 2.25x more power efficiency than top-tier GPUs. LG was so impressed that they are now offering "RNGD Servers" as a turnkey solution for their own enterprise clients. - Hugging Face:

The "GitHub of AI" has partnered with FuriosaAI to optimize its entire Transformers library for the RNGD. This ensures that any developer can run massive models like Llama 3.1 (8B and 70B) with nearly zero latency. - Enterprise RAG:

Companies are already using RNGD to power Retrieval-Augmented Generation (RAG) systems—essentially internal "corporate brains" that can search through millions of legal or technical documents in seconds.

The Founders: A Team of "Misfits"

The audacity to reject Meta comes from the top. Founded in 2017 by June Paik (Baek Jun-ho), the company's origin story is less "Silicon Valley garage" and more "rehab center epiphany."

The "Blessing in Disguise"

June Paik was a veteran engineer at AMD and Samsung Electronics, specializing in memory systems and GPU design. However, the spark for FuriosaAI didn't happen in a lab—it happened while he was bedridden.

In 2014, Paik suffered a torn achilles tendon during a Samsung company soccer match. During the grueling months of recovery, he spent his time taking online AI courses from Stanford University. He realized two things:

- AI was the future of humanity's "metabolism."

- The hardware we were using (standard GPUs) was a "legacy" solution that would eventually hit a "power wall."

Building the Dream Team

He left Samsung with "absolute certainty" that he needed to build a chip from the ground up, not one adapted from gaming. He teamed up with Hanjoon Kim (now CTO), a former Samsung colleague, and a group of "misfits" from Google and Qualcomm who shared a radical belief: a market dominated by a single player isn't a healthy ecosystem.

They adopted the "Blitzscaling" philosophy—making bold, fast moves to solve the energy crisis of AI before the rest of the world even realized it was coming.

The Competitive Chessboard

The "NVIDIA Moat" remains deep, protected by their CUDA software, but FuriosaAI is carving out a distinct niche in sustainable inference. They aren't just fighting the giants like NVIDIA and AMD, or regional rivals like China’s Cambricon; they are fighting the very idea that AI has to be energy-hungry.

As the industry shifts from training models to running them (inference), the winner won't be the chip that uses the most electricity—it will be the "Renegade" that does the most with the least.