- What is KinetIQ? Understand the features and architecture of the software.

- The use of ROBOTERA's XHAND1

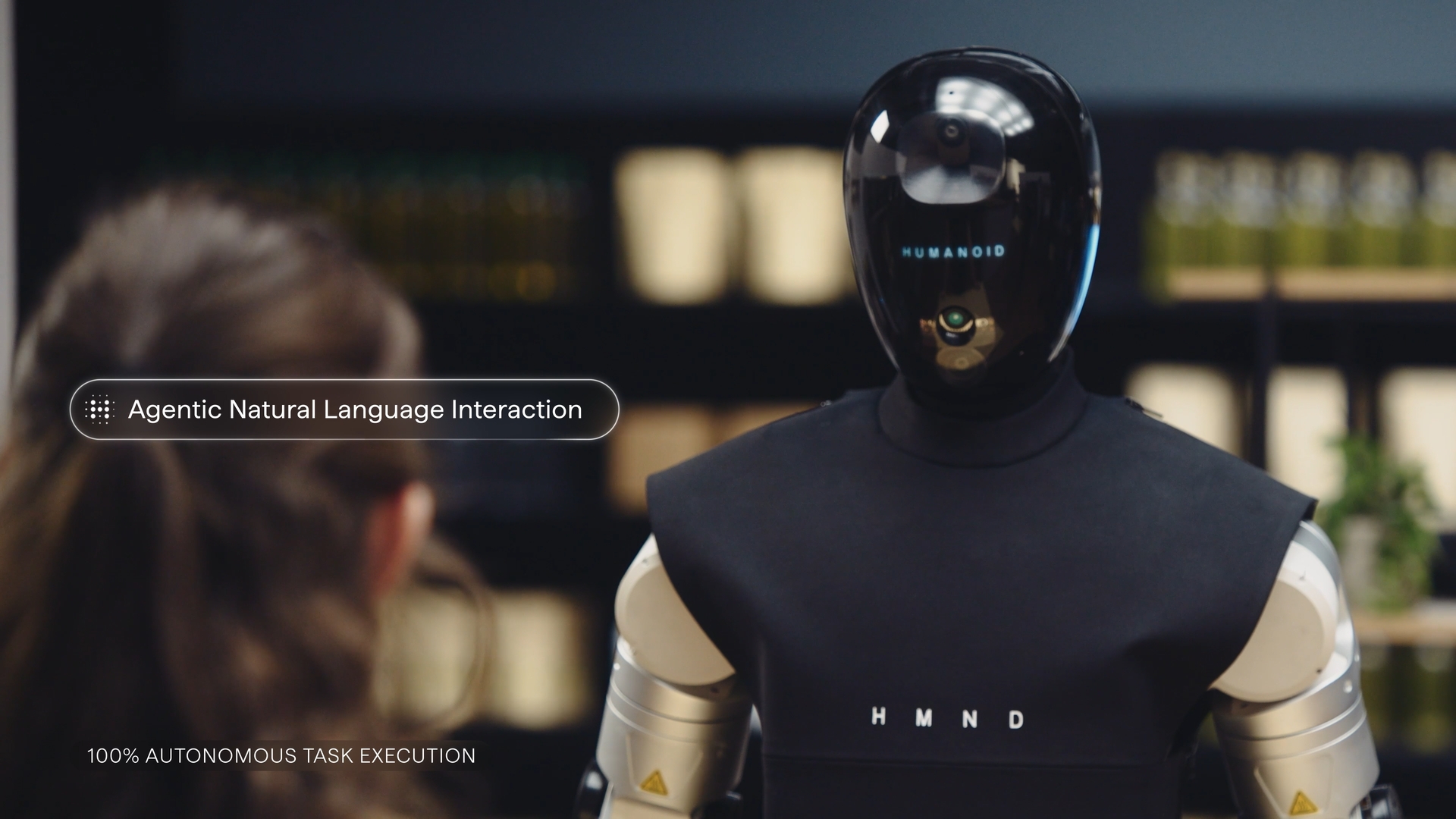

- HMND 01 Alpha's deployment at Siemens and Schaeffler.

UK-based startup Humanoid (SKL Robotics Ltd.) has unveiled KinetIQ, a multi-layered AI software framework designed to be the "central nervous system" for its next generation of bipedal workers. While the industry has spent years perfecting how a single robot moves, KinetIQ shifts the focus to how a global fleet of robots thinks, adapts, and coordinates.

The Architecture of Intelligence: A Cross-Timescale Framework

KinetIQ is defined by its cross-timescale architecture. It doesn't just run a single loop; it operates across four simultaneous cognitive layers, each with its own decision-making frequency and planning horizon. In this "agentic" pattern, each layer treats the one below it as a tool to be prompted and orchestrated.

- System 3: The Fleet Agent (seconds to minutes). This is the high-level "General." It is an agentic layer that integrates with facility management systems to optimize entire fleets. It handles task requests, coordinates robot swaps, and ensures maximum uptime across logistics and manufacturing.

- System 2: Robot-Level Reasoning (seconds to sub-minute). The "Manager." Using an omni-modal language model, it interprets instructions from System 3 and decomposes them into sub-tasks. It monitors progress and, if stuck, can even request human intervention through the fleet layer.

- System 1: VLA-Based Execution (sub-second, 5-10 Hz). The "Worker." This Vision-Language-Action neural network commands specific body parts (hands, torso) to execute low-level objectives like picking, placing, or packing.

- System 0: Whole-Body Control (milliseconds, 50 Hz). The "Reflexes." This RL-trained layer solves for the state of all joints to guarantee dynamic stability. It ensures the robot stays balanced while executing the poses set by System 1.
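The layered timing can be illustrated with a toy multi-rate loop. This is a minimal sketch, not Humanoid's implementation: the 50 Hz and 10 Hz rates come from the figures above, while the System 2 (0.5 Hz) and System 3 (0.1 Hz) rates, the `Layer` class, and the placeholder string commands are hypothetical choices for illustration. Each layer recomputes only when its own period elapses; in between, the layer below keeps acting on the most recent command it received.

```python
from dataclasses import dataclass
from typing import Callable, Optional

BASE_HZ = 50  # System 0 rate from the article, used here as the shared simulation clock


@dataclass
class Layer:
    name: str
    hz: float                              # decision frequency of this layer (hypothetical for Systems 2 and 3)
    step: Callable[[Optional[str]], str]   # turns the command from above into a command for below
    calls: int = 0
    last_output: Optional[str] = None

    @property
    def period_ticks(self) -> int:
        # Number of base-clock ticks between two decisions of this layer
        return max(1, round(BASE_HZ / self.hz))


def run(layers: list[Layer], seconds: float) -> None:
    """Tick every layer on a shared 50 Hz clock, top layer first."""
    for tick in range(int(seconds * BASE_HZ)):
        command_from_above: Optional[str] = None
        for layer in layers:  # ordered System 3 -> System 0
            if tick % layer.period_ticks == 0:
                # This layer "prompts" the one below with a fresh command
                layer.last_output = layer.step(command_from_above)
                layer.calls += 1
            # Otherwise the previous command stays in force for the layer below
            command_from_above = layer.last_output


# Hypothetical placeholder behaviour for each layer
layers = [
    Layer("System 3 (fleet agent)", 0.1, lambda _: "move totes from line A to line B"),
    Layer("System 2 (robot reasoning)", 0.5, lambda task: f"next sub-task of: {task}"),
    Layer("System 1 (VLA policy)", 10, lambda sub: f"hand/torso targets for: {sub}"),
    Layer("System 0 (whole-body control)", 50, lambda pose: f"joint torques tracking: {pose}"),
]
run(layers, seconds=20)
for layer in layers:
    print(f"{layer.name}: {layer.calls} decisions")
```

Over a simulated 20 seconds the slow layers decide only a handful of times while System 0 acts on every tick, which is the intent of the cross-timescale split: expensive reasoning runs rarely, cheap reflexes run constantly.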

One of KinetIQ’s most powerful features is its cross-embodiment capability. A single AI model can control robots with completely different morphologies, whether they are the Alpha Wheeled units or the Alpha Bipedal platforms.

Because the framework is unified, data collected on one robot type helps improve performance across the entire fleet. Whether the robot has wheels for back-of-store grocery picking or legs for navigating a home, the "brain" remains consistent.
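One common way to let a single model drive different bodies is to have a shared policy emit an embodiment-agnostic command and leave the morphology-specific translation to thin adapters. The article does not describe KinetIQ's internals at this level, so the sketch below is an assumption throughout: `SharedAction`, the adapter functions, and the `alpha_wheeled`/`alpha_bipedal` keys are hypothetical, and `shared_policy` is a hand-written stand-in for a learned model.

```python
from dataclasses import dataclass


@dataclass
class SharedAction:
    """Embodiment-agnostic command from the shared policy (hypothetical schema)."""
    base_velocity: float  # desired forward speed of the base, m/s
    hand_target: str      # symbolic manipulation goal, e.g. "pick tote"


def shared_policy(observation: dict) -> SharedAction:
    # Stand-in for one learned model whose weights serve every morphology
    speed = 0.0 if observation["at_goal"] else 0.5
    return SharedAction(base_velocity=speed, hand_target=observation["task"])


def wheeled_adapter(action: SharedAction) -> dict:
    # Wheeled base: speed maps directly onto a wheel command
    return {"wheel_speed": action.base_velocity, "hands": action.hand_target}


def bipedal_adapter(action: SharedAction) -> dict:
    # Legged base: speed becomes a gait request for the low-level controller
    gait = "stand" if action.base_velocity == 0.0 else "walk"
    return {"gait": gait, "step_speed": action.base_velocity, "hands": action.hand_target}


ADAPTERS = {"alpha_wheeled": wheeled_adapter, "alpha_bipedal": bipedal_adapter}

# One pooled dataset: experience from either body can retrain the same policy
shared_dataset: list[tuple[str, dict, SharedAction]] = []


def act(embodiment: str, observation: dict) -> dict:
    action = shared_policy(observation)
    shared_dataset.append((embodiment, observation, action))
    return ADAPTERS[embodiment](action)


wheeled_cmd = act("alpha_wheeled", {"at_goal": False, "task": "pick tote"})
bipedal_cmd = act("alpha_bipedal", {"at_goal": True, "task": "place tote"})
print(wheeled_cmd)
print(bipedal_cmd)
```

Because both robots log into the same `shared_dataset`, a retraining step would see wheeled and bipedal experience together, which mirrors the article's point that data from one robot type can benefit the whole fleet.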

ROBOTERA XHAND1 Integration

To truly solve Physical AI, the software needs a high-fidelity interface with the world. While KinetIQ provides the "thought," the HMND 01 Alpha platforms shown in the video are equipped with ROBOTERA's XHAND1 to provide the "touch."

- Bionic Dexterity: With 12 active DoF per hand and ±15° of lateral index-finger movement, the XHAND1 mirrors human-scale movement, enabling complex, dexterous manipulation that simple grippers cannot handle.

- Tactile Feedback: Each fingertip features a 270° tactile array sensor capable of detecting forces as low as 0.05 N, and the hand can exert a one-handed grip force of up to 80 N. This sensory data feeds directly back into the KinetIQ loop, allowing real-time adjustments if an object is slippery or fragile.

- Industrial Strength: Despite its sensitivity, the hand supports a palm-up load of up to 25 kg (each finger can lift up to 5 kg), matching heavy-duty industrial requirements.
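The real-time adjustment for slippery or fragile objects can be pictured as a simple slip reflex. This minimal sketch assumes a Coulomb friction-cone model (the object holds while tangential force ≤ μ × normal force). The 0.05 N and 80 N limits come from the XHAND1 figures above; `adjust_grip`, the friction coefficient `mu`, the safety `margin`, and `fragile_limit_n` are illustrative assumptions, not ROBOTERA or KinetIQ parameters.

```python
MIN_DETECTABLE_N = 0.05  # fingertip sensitivity quoted for the XHAND1
MAX_GRIP_N = 80.0        # one-handed grip force quoted for the XHAND1


def adjust_grip(normal_n: float, tangential_n: float, mu: float = 0.5,
                margin: float = 1.25, fragile_limit_n: float = MAX_GRIP_N) -> float:
    """Return an updated grip (normal) force command from one tactile reading.

    Hypothetical slip reflex: the object stays put while
    tangential_n <= mu * normal_n. A lower mu models a more slippery object.
    """
    if tangential_n < MIN_DETECTABLE_N:
        # Below the sensor's resolution: no usable slip signal, keep the current grip
        return normal_n
    # Normal force that keeps the tangential load inside the friction cone, with a safety margin
    required_n = margin * tangential_n / mu
    # Never loosen the grip here, and never exceed the object's or the hand's force limit.
    # If the fragile limit wins, the object may still slip and a higher layer must react.
    return min(max(normal_n, required_n), fragile_limit_n, MAX_GRIP_N)


print(adjust_grip(10.0, 2.0))                      # well inside the cone: grip unchanged
print(adjust_grip(10.0, 6.0))                      # close to slipping: squeeze harder
print(adjust_grip(10.0, 6.0, mu=0.3))              # slipperier object: needs more force
print(adjust_grip(5.0, 4.0, fragile_limit_n=8.0))  # fragile object: force capped
```

Capping at `fragile_limit_n` makes the trade-off explicit: a delicate item is protected from crushing at the cost of possibly slipping, which is exactly the kind of situation that would be escalated to the slower reasoning layers.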

[Image: still from the YouTube video "Introducing KinetIQ | Humanoid's AI framework" at 3:05]

Field-Tested Performance: Siemens & Schaeffler

KinetIQ is already proving its commercial scalability. In recent field tests at a Siemens facility, the wheeled Alpha reached a throughput of 60 tote moves per hour, a human-equivalent pace.
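The 60-totes-per-hour figure translates directly into a per-move time budget. The sketch below just spells out that arithmetic; the helper names and the 8-hour window in the example are assumptions for illustration, and `moves_in_window` ignores downtime, charging, and robot swaps.

```python
SECONDS_PER_HOUR = 3600


def cycle_time_s(totes_per_hour: float) -> float:
    """Average time budget per tote move at a given hourly throughput."""
    return SECONDS_PER_HOUR / totes_per_hour


def moves_in_window(totes_per_hour: float, hours: float, robots: int = 1) -> float:
    """Tote moves in a time window, assuming constant throughput and no downtime."""
    return totes_per_hour * hours * robots


print(cycle_time_s(60))        # one tote move every 60 seconds
print(moves_in_window(60, 8))  # hypothetical 8-hour window, single robot
```

At this rate each tote move, from perception and grasp through transport and placement, has to fit inside roughly one minute on average.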

The framework's ability to scale is further validated by a 5-year partnership with Schaeffler. This deal aims to deploy hundreds of KinetIQ-powered robots into production lines. By combining HMND's intelligence with Schaeffler's world-leading actuators, the two companies are creating a formidable force in the physical AI space.