- What is Reinforcement Learning (RL)?

- How does RL work behind the scenes?

- What is Reinforcement Learning from Human Feedback (RLHF)?

Imagine a little child, still wobbly on their legs, pulling themselves up for the hundredth time. They take a step, then another, before tumbling down again. What happens next? They don't give up. They get a little hug, a cheer of "Good job!" for that brief moment of balance, or perhaps just the internal satisfaction of making progress. This beautifully messy, trial-and-error journey is a fitting analogy for Reinforcement Learning (RL), the machine learning technique that teaches AI systems to master tasks ranging from the simplest to the most complex.

The Training Ground: A Cycle of Discovery

Reinforcement Learning is all about teaching an "agent" (a software program or a robot) to make a sequence of decisions in an "environment" to achieve a specific "goal." It's learning to act, not just to predict.

Think of our little child learning to walk.

- The Agent: The child, the learner and decision-maker.

- The Environment: The living room floor, with all its obstacles.

- The Goal: To walk across the room.

- The Action: The child tries to stand up, move a leg, or crawl.

- The State: The child's current posture, location, and what they see.
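To make the vocabulary concrete, here is a minimal sketch of the same pieces in code. Everything in it is an illustrative assumption, not part of any library: the living room becomes a hypothetical five-cell `Corridor`, the state is the agent's position, the actions are "step back" and "step forward", and the goal is the last cell. The agent shown is untrained and simply acts at random.

```python
import random


class Corridor:
    """Hypothetical toy environment: cells 0..length-1, goal at the far end."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0  # the state: where the agent currently stands

    def reset(self):
        """Put the agent back at the start and return the initial state."""
        self.position = 0
        return self.position

    def step(self, action):
        """Apply an action (-1 = step back, +1 = step forward).

        Returns (new_state, reward, done). Walls clamp the position.
        """
        self.position = min(max(self.position + action, 0), self.length - 1)
        done = self.position == self.length - 1  # goal reached
        reward = 1.0 if done else 0.0
        return self.position, reward, done


def random_agent(state):
    """An untrained agent: ignores the state and picks an action at random."""
    return random.choice([-1, 1])


def run_episode(env, agent, max_steps=1000):
    """Let the agent act until it reaches the goal or runs out of steps."""
    state = env.reset()
    total_reward = 0.0
    for steps in range(1, max_steps + 1):
        state, reward, done = env.step(agent(state))
        total_reward += reward
        if done:
            return steps, total_reward
    return max_steps, total_reward


if __name__ == "__main__":
    steps, total = run_episode(Corridor(), random_agent)
    print(f"reached the end after {steps} steps, total reward {total}")
```

A random agent usually wanders for a while before it stumbles onto the goal. Learning, in RL terms, means replacing `random_agent` with something that uses the reward to do better.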

The Training Process: An Iterative Loop

- Observation of the State: The child (agent) observes their current situation—I'm sitting on the floor, the toy is across the room.

- Action Selection (Policy): Based on their current strategy (or policy), the child chooses an action—they try to push off their knees. Initially, this policy is random: they flail, they roll, they take a wobbly step.

- Environment Response and New State: The floor (environment) responds. The child either stays upright or falls. A new state is reached.

- Reward/Punishment Signal: This is the core of RL. A "reward function" defines success.

- Reward (Positive Reinforcement): If the child stays upright for a second or takes a solid step, they might get a clap, a smile, or the internal sense of success. This action is reinforced.

- Punishment (Negative Reward): If the child falls, they might get a bump on the head and let out a frustrated cry. This penalty tells the agent that the action (or sequence of actions) was not optimal, so it becomes less likely to be repeated.

- Policy Update: The agent uses this reward information to adjust its internal strategy (policy). It learns: "The actions I took just now led to a positive reward, so I should be more likely to try those actions again from a similar state."

This loop of State → Action → Reward → New State → Policy Update repeats thousands or even millions of times. Just as a child tries and falls countless times before walking, an RL agent often trains in simulation, where years' worth of experience can be compressed into hours of compute, until it converges on a policy that maximizes cumulative reward over the long term.
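The loop can be sketched with tabular Q-learning, one of the simplest RL algorithms. This is a toy under stated assumptions, not how large systems are trained: the environment is a hypothetical five-cell corridor with a reward of 1 at the far end, and the names `step`, `choose_action`, `train`, and `greedy_policy`, as well as the values of `alpha`, `gamma`, and `epsilon`, are illustrative choices.

```python
import random

N_STATES = 5        # cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)  # step back, step forward


def step(state, action):
    """Environment response: returns (new_state, reward, done)."""
    new_state = min(max(state + action, 0), N_STATES - 1)
    done = new_state == N_STATES - 1
    return new_state, (1.0 if done else 0.0), done


def choose_action(q, state, epsilon, rng):
    """Epsilon-greedy policy: mostly exploit, occasionally explore."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[state].values())
    # Break ties at random so the untrained agent still wanders.
    return rng.choice([a for a in ACTIONS if q[state][a] == best])


def train(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Run the state -> action -> reward -> new state -> update loop."""
    rng = random.Random(seed)
    # q[state][action] estimates the long-term (discounted) reward.
    q = [{a: 0.0 for a in ACTIONS} for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = choose_action(q, state, epsilon, rng)  # action selection
            new_state, reward, done = step(state, action)   # environment response
            # Target: immediate reward plus discounted value of the new state.
            future = 0.0 if done else gamma * max(q[new_state].values())
            target = reward + future
            q[state][action] += alpha * (target - q[state][action])  # policy update
            state = new_state
    return q


def greedy_policy(q):
    """Best known action in each non-goal state."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

With these defaults the learned policy comes out as "step forward" in every cell. The discount factor `gamma` is what encodes "over the long term": a reward that is several steps away still counts, just a little less than an immediate one.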

The Human Touch: Reinforcement Learning from Human Feedback (RLHF)

While traditional RL defines the "good" actions with a purely mathematical reward function, what happens when the goal is something abstract like "be helpful" or "be safe", especially for AI language models? This is where Reinforcement Learning from Human Feedback (RLHF) steps in.

RLHF brings human judgements directly into the training loop, moving beyond simple programmed rules.

- Human Feedback Collection: The AI generates several different responses to a prompt. Human reviewers then rank those responses from best to worst based on helpfulness, harmlessness or alignment with human values.

- Reward Model Training: This human-ranked data is then used to train a separate AI model, called the Reward Model. This model learns to predict, for any given AI output, how a human would likely rank it. Essentially, it becomes an automated critic that captures human preferences.

- Policy Optimization: The original agent (the policy) is then fine-tuned using the standard RL loop, but with a crucial difference: the Reward Model replaces the simple, hand-coded reward function. The AI agent performs an action (it generates a response), and the Reward Model assigns a score. The AI learns to maximize this human-informed score, steering its final behavior toward the subtle, nuanced preferences of its human reviewers.
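The reward-model step can be sketched as follows. This is a deliberately tiny stand-in, not a production recipe: real reward models are neural networks that read the full text, whereas here each response is reduced to two made-up features (`answers_question`, `is_rude`) and the model is a linear score. The pairwise loss, which pushes the preferred response to score higher than the rejected one, is a common way to learn from rankings. The data and all names are hypothetical.

```python
import math


def score(weights, features):
    """Toy reward model: a linear score over hand-made response features."""
    return sum(w * x for w, x in zip(weights, features))


def train_reward_model(pairs, n_features, lr=0.5, epochs=200):
    """Fit weights so that preferred responses outscore rejected ones.

    `pairs` is a list of (preferred_features, rejected_features) tuples.
    Minimizes -log(sigmoid(score(preferred) - score(rejected))) by
    plain stochastic gradient descent.
    """
    weights = [0.0] * n_features
    for _ in range(epochs):
        for preferred, rejected in pairs:
            margin = score(weights, preferred) - score(weights, rejected)
            # d/d(margin) of -log(sigmoid(margin)) is -(1 - sigmoid(margin)).
            grad_scale = 1.0 - 1.0 / (1.0 + math.exp(-margin))
            for i in range(n_features):
                weights[i] += lr * grad_scale * (preferred[i] - rejected[i])
    return weights


# Hypothetical human rankings, flattened into (preferred, rejected) pairs.
# Features: (answers_question, is_rude)
PAIRS = [
    ((1.0, 0.0), (0.0, 0.0)),  # on-topic beats off-topic
    ((1.0, 0.0), (1.0, 1.0)),  # polite beats rude
    ((0.0, 0.0), (0.0, 1.0)),  # even off-topic, polite beats rude
]

if __name__ == "__main__":
    w = train_reward_model(PAIRS, n_features=2)
    candidates = {"helpful": (1.0, 0.0), "rude": (1.0, 1.0), "off-topic": (0.0, 0.0)}
    for name, feats in candidates.items():
        print(name, round(score(w, feats), 2))
```

After training, the weight on `answers_question` is positive and the weight on `is_rude` is negative, so the model scores unseen responses the way the reviewers would probably have ranked them. The policy optimization step, not shown here, would then use these scores as the reward in an RL algorithm such as PPO.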

Conclusion

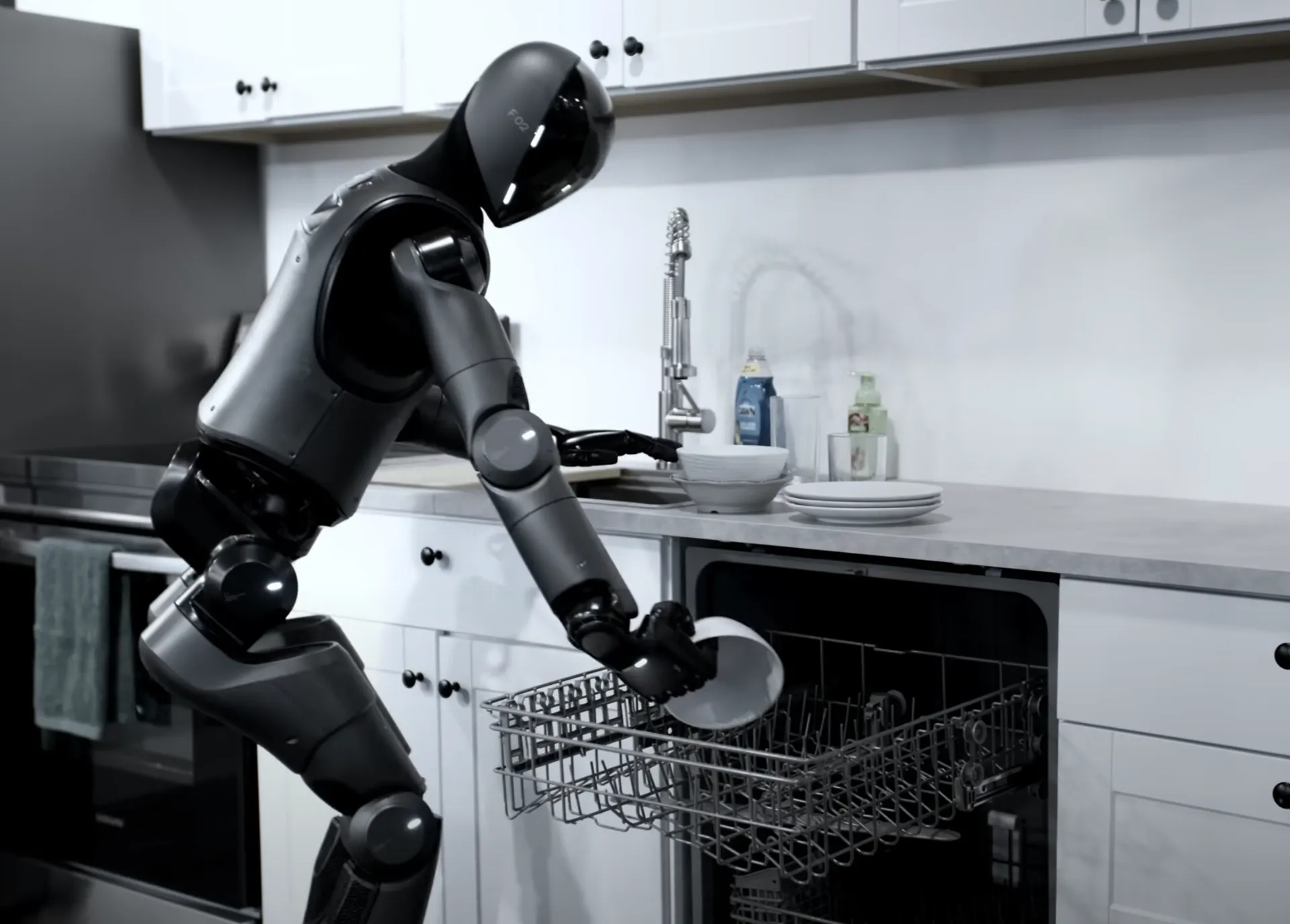

Reinforcement learning is poised to become the bedrock of advanced, adaptive AI systems. The future of RL will be defined by its transition from a specialized research tool to a practical technology for real-world deployment.

We can anticipate breakthroughs in three key areas:

- Efficiency and Generalization: New algorithms, like those in offline and meta-RL, will drastically reduce the need for massive, costly trial-and-error, allowing agents to learn from limited data and quickly adapt knowledge from simulation to reality (sim-to-real transfer).

- Broader Real-World Deployment: RL will expand from games and controlled environments into high-stakes domains like autonomous vehicles, industrial automation, and healthcare. This expansion will be enabled by a greater focus on safety guarantees, using techniques like constrained RL and formal verification to ensure autonomous systems make reliable and safe decisions.

- Tighter AI Integration: The most powerful systems will emerge from integrating RL with other AI forms. Combining RL with large language models will allow agents to follow complex natural language instructions, while multi-agent RL will power collaborative, dynamic systems for everything from supply chain management to smart city traffic control.

In essence, the next era of RL promises a world of highly flexible, continuously learning, and truly autonomous agents—a fundamental leap toward generalized artificial intelligence that can operate with both mastery and safety in the complex, unpredictable real world.