Key Takeaways:

- What are LLMs, VLMs, and LBMs?

- How do they work?

- Understanding these models helps clarify how AI interprets language, vision, and behavior—raising questions about the future of human-AI interaction and ethical boundaries.

If you're keeping up with AI tech news, you’ve probably come across terms like LLM, VLM, or even LBM tossed around. They might sound like confusing acronyms, especially if you’re new to AI or haven’t seen how these models actually work. But worry not—let’s break each one down in simple terms, uncover what makes them tick, and see how they’re shaping the AI you interact with every day. Whether it’s chatting with a chatbot, asking your phone about a photo, or even robots learning how to behave, these models are the invisible brains behind the scenes.

Large Language Models (LLM): The Masters of Words

LLMs (Large Language Models) are trained on massive amounts of text, which lets them understand and generate human-like language. Ever had a conversation with a chatbot that feels surprisingly natural? Behind that is often an LLM like GPT-4. It predicts the most likely next word (more precisely, the next token) given everything written so far. Repeating that step over and over lets it write essays, answer questions, or translate languages.
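
To make the next-word idea concrete, here is a minimal sketch in Python. It deliberately swaps the transformer for a much simpler bigram model, which only counts which word follows which in a tiny made-up corpus. The prediction loop has the same shape as an LLM's greedy decoding, though: score the candidates, pick the likeliest, append it, repeat. The corpus and all function names are hypothetical.

```python
from collections import Counter, defaultdict

# A tiny, hypothetical corpus standing in for the "massive amounts of text" an LLM sees.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."

def train_bigram(text):
    """Count how often each word follows each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, word):
    """Turn the follow-up counts for `word` into a probability distribution."""
    followers = counts[word]
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}

def generate(counts, start, n_words):
    """Greedy decoding: repeatedly append the most probable next word."""
    out = [start]
    for _ in range(n_words):
        probs = next_word_probs(counts, out[-1])
        if not probs:  # no known continuation for this word
            break
        out.append(max(probs, key=probs.get))
    return " ".join(out)

counts = train_bigram(corpus)
print(next_word_probs(counts, "the"))
print(generate(counts, "the", 4))
```

Starting from "the", greedy decoding produces "the cat sat on the". "cat" follows "the" most often in the corpus, and ties (such as "sat" versus "ate" after "cat") go to whichever word was seen first. Real LLMs condition on the whole context rather than just the previous word, and they often sample from the distribution instead of always taking the top choice.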

At the heart of most large language models, such as GPT-4 or BERT, is the transformer architecture. This architecture uses several critical components:

- Tokenization: The input text is split into smaller units called tokens (words or subwords), and each token is mapped to an integer ID the model can process.

- Embedding Layers: These convert tokens into dense vector representations capturing semantic meaning.

- Self-Attention Mechanism: This lets the model weigh the importance of each word in the context of others, allowing it to understand sentences holistically rather than word-by-word.

- Multi-Head Attention: Multiple "attention heads" look at different parts of the text simultaneously, capturing various relationships.

- Stacked Transformer Layers: Repeated layers increase the model’s ability to learn complex language patterns.

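- Output Layer: A final linear layer followed by a softmax turns the model's internal representation into a probability distribution over the vocabulary. This is used to predict the next token (GPT-style models) or to fill in masked-out words (BERT-style models).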
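
The components listed above can be strung together in a toy pipeline. This is a minimal, untrained sketch that assumes a six-word vocabulary, a whitespace tokenizer instead of a learned subword one, random embeddings, a single attention head whose query/key/value projections are the identity, and no stacked layers. Every name in it is hypothetical. Its output probabilities are therefore meaningless, but the data flow (text to token IDs to vectors to attention to a next-token distribution) mirrors the list.

```python
import math
import random

random.seed(0)

# 1. Tokenization: a toy whitespace tokenizer with a fixed, hypothetical vocabulary.
#    Real LLMs use learned subword tokenizers; unknown words map to "<unk>" here.
vocab = ["<unk>", "the", "cat", "sat", "on", "mat"]
token_id = {tok: i for i, tok in enumerate(vocab)}

def tokenize(text):
    return [token_id.get(w, 0) for w in text.lower().split()]

# 2. Embedding layer: one random vector per token ID (learned during training in a real model).
d_model = 4
embedding = [[random.gauss(0, 1) for _ in range(d_model)] for _ in vocab]

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# 3. Self-attention (one head). For simplicity the query, key and value
#    projections are the identity, so Q = K = V = the embeddings.
def self_attention(vectors):
    scale = math.sqrt(len(vectors[0]))
    outputs = []
    for q in vectors:
        # How strongly this token attends to every token in the sequence.
        weights = softmax([dot(q, k) / scale for k in vectors])
        # Output = attention-weighted sum of the value vectors.
        outputs.append([sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(len(q))])
    return outputs

# 4. Output layer: score every vocabulary entry against the last position's vector
#    (reusing the embedding matrix, a simplification known as weight tying),
#    then softmax the scores into next-token probabilities.
def next_token_distribution(text):
    ids = tokenize(text)
    hidden = self_attention([embedding[i] for i in ids])
    logits = [dot(hidden[-1], e) for e in embedding]
    return softmax(logits)

probs = next_token_distribution("the cat sat on the")
print({tok: round(p, 3) for tok, p in zip(vocab, probs)})
```

Because only the last position is read, adding the causal mask that GPT-style models apply would not change this result. Training would adjust the embeddings (and, in a real model, the attention projections and many stacked layers) so that the distribution favors plausible continuations.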

Think of an LLM as a reader working through a novel and guessing the next word from the story so far. LLMs do this on a massive scale, drawing on patterns learned from billions of examples.

Vision-Language Models (VLM): The Eyes and Mind Combined

VLMs (Vision-Language Models) take understanding a step further by combining language comprehension with visual recognition. They don’t just read or write words—they can also “see” and describe images.

VLMs combine two AI disciplines: computer vision and Natural Language Processing (NLP). Technically, they consist of:

- Visual Encoder: Processes images by extracting features using Convolutional Neural Networks (CNNs) or Vision Transformers (ViT). It identifies objects, textures, and spatial information.

- Text Encoder: Converts written queries or captions into language embeddings using transformer layers.

- Cross-Modal Alignment Layers: These connect visual and textual data, enabling the model to associate image features with text meaning.

- Decoder or Classification Heads: Generate text descriptions, answer questions about images, or perform tasks like image captioning or visual question answering.
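
The cross-modal alignment step can be sketched with one common approach, CLIP-style contrastive matching, in which the image and text encoders are trained so that matching pairs land close together in a shared embedding space. The sketch below assumes the encoders have already run: the vectors are hand-picked, hypothetical stand-ins for their outputs. It only shows how cosine similarity plus a temperature-scaled softmax ranks candidate captions for an image. Other VLMs align the two modalities differently, so treat this as one illustration rather than the design every VLM uses.

```python
import math

# Hypothetical outputs of a visual encoder and a text encoder, already projected
# into a shared embedding space. A real VLM would compute these with a CNN/ViT and
# a transformer; here they are hand-picked 3-D vectors for illustration.
image_embedding = [0.9, 0.1, 0.3]  # e.g. a photo of a living room

caption_embeddings = {
    "a living room with a sofa": [0.8, 0.2, 0.4],
    "a dog playing in a park": [0.1, 0.9, 0.2],
    "a plate of pasta": [0.2, 0.1, 0.9],
}

def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def softmax(xs, temperature=0.1):
    # A low temperature sharpens the distribution; contrastive models such as
    # CLIP scale their similarities in a similar way before the softmax.
    m = max(xs)
    exps = [math.exp((x - m) / temperature) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def rank_captions(image_vec, captions):
    """Return (caption, probability) pairs, best match first."""
    names = list(captions)
    sims = [cosine(image_vec, captions[n]) for n in names]
    probs = softmax(sims)
    return sorted(zip(names, probs), key=lambda pair: pair[1], reverse=True)

for caption, p in rank_captions(image_embedding, caption_embeddings):
    print(f"{p:.3f}  {caption}")
```

The living-room caption wins because its vector points in nearly the same direction as the image vector (cosine similarity of about 0.98, versus about 0.52 for the pasta caption and 0.27 for the dog caption), and the low temperature gives it nearly all of the probability.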

Imagine uploading a photo of your living room and asking, “Where should I place a new lamp?” A VLM analyzes the image, recognizes the furniture and lighting conditions, and then suggests a suitable spot. By fusing computer vision (recognizing objects and scenes) with language generation, it can converse naturally about what it sees.

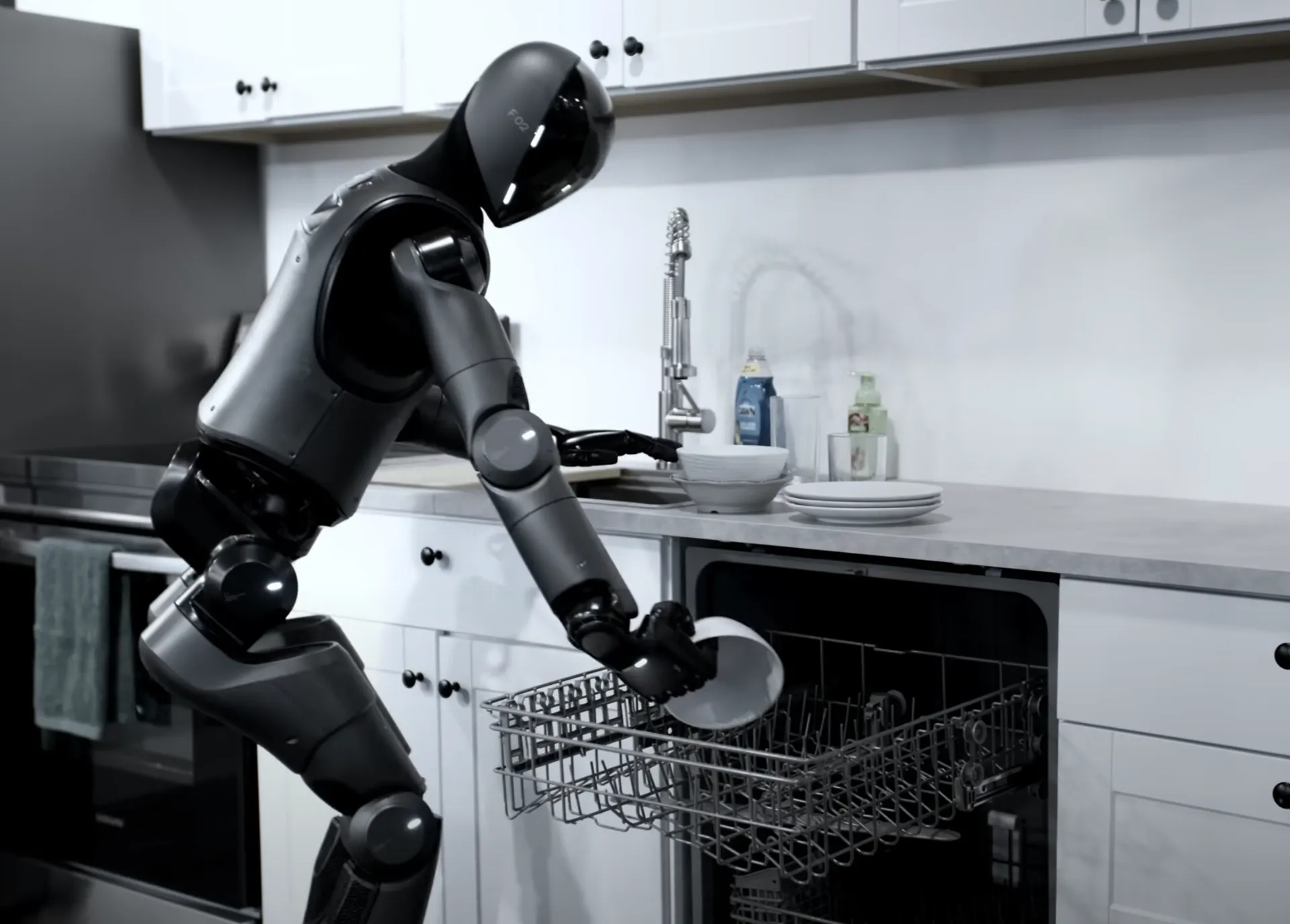

Large Behavior Models (LBM): The Predictors of Action

Unlike LLMs and VLMs, which excel at language and images, LBMs (Large Behavior Models) focus on understanding and simulating human behavior. They learn patterns of actions and decision-making, then predict what might happen next or suggest optimal behaviors.

Because they deal with complex human behaviors and sequential decision-making, LBMs rely on techniques for learning and predicting actions over time. Key technologies include:

- Reinforcement Learning: Models learn which actions yield the best results through trial and error, receiving rewards or penalties.

- Markov Decision Processes (MDP): Mathematical frameworks that model decision-making in uncertain environments.

- Sequence Modeling: Utilizing transformers or recurrent architectures to predict future behaviors based on past sequences.

- Simulation and Real-World Data: LBMs train on large datasets of human or agent behavior to generalize patterns.
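
The reinforcement-learning and MDP ideas above can be illustrated with tabular Q-learning, a classic trial-and-error algorithm, on a deliberately tiny MDP. Everything here is a hypothetical toy: a five-cell corridor, a reward of +1 for reaching the last cell, and hand-picked learning-rate, discount, and exploration settings. Real LBMs operate at a vastly larger scale and are not necessarily trained this way. The sketch only shows how rewards gradually shape which action is preferred in each state.

```python
import random

random.seed(42)

# A hypothetical MDP: a corridor of 5 cells. The agent starts in cell 0 and
# receives +1 for reaching cell 4, which ends the episode. All other moves give 0.
N_STATES = 5
ACTIONS = (-1, +1)  # move left, move right
GOAL = N_STATES - 1

def step(state, action):
    """Environment transition: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)  # walls at both ends
    if next_state == GOAL:
        return next_state, 1.0, True
    return next_state, 0.0, False

# Q-table: estimated long-term value of taking each action in each state.
Q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
alpha, gamma, epsilon = 0.5, 0.9, 0.2  # learning rate, discount factor, exploration rate

def choose_action(state):
    # Epsilon-greedy: usually exploit the best-known action, sometimes explore.
    if random.random() < epsilon:
        return random.randrange(len(ACTIONS))
    best = max(Q[state])
    return random.choice([a for a, q in enumerate(Q[state]) if q == best])

for episode in range(200):
    state, done = 0, False
    while not done:
        a = choose_action(state)
        next_state, reward, done = step(state, ACTIONS[a])
        # Q-learning update: move Q toward reward + discounted best future value.
        target = reward if done else reward + gamma * max(Q[next_state])
        Q[state][a] += alpha * (target - Q[state][a])
        state = next_state

policy = ["left" if q[0] > q[1] else "right" for q in Q[:GOAL]]
print(policy)
```

After training, the greedy policy moves right in every non-goal cell. The learned values also reflect the discount factor: the estimate for moving right approaches 1.0 in cell 3, 0.9 in cell 2, and 0.81 in cell 1, because a reward that arrives later counts for less.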

Picture a self-driving car navigating busy streets. An LBM helps it interpret the behavior of pedestrians, other drivers, and traffic signals, allowing it to predict movements and react safely. Or think of AI in gaming that controls characters who adapt and respond to player actions in complex, human-like ways.

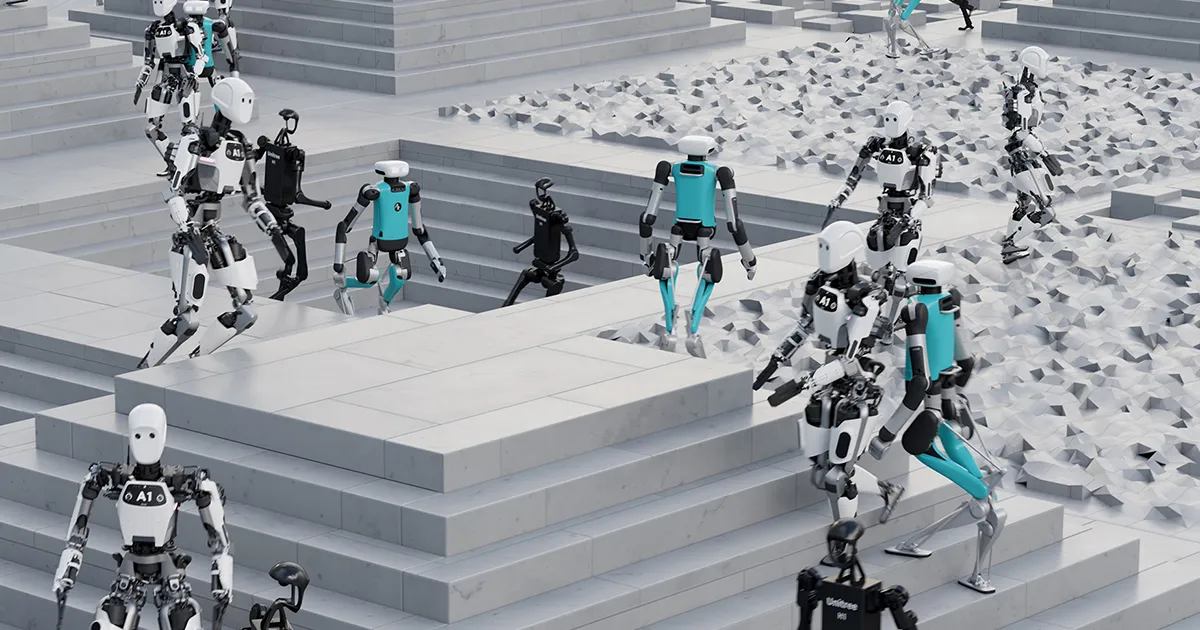

The AI Model Symphony: Working Together

These three models form a dynamic team: LLMs craft language and conversation, VLMs bridge sight and speech, and LBMs predict and guide behaviors. This synergy powers new applications—from virtual assistants that see and talk, to robots that act and adapt in real time.

Could this blend of language, vision, and behavior present a future where AI understands us as intuitively as our closest friends? Or does it risk blurring lines that make human interaction special?
