Intro

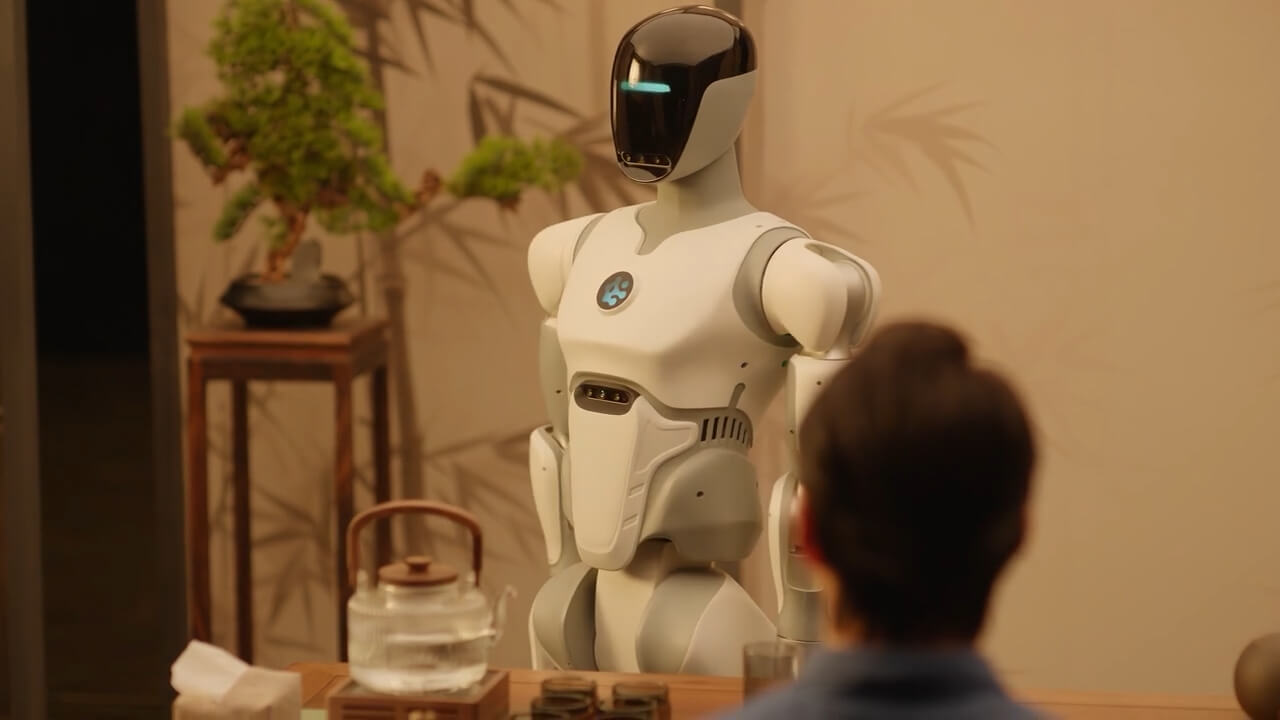

AlphaBot 2 has a humanoid form with 34 degrees of freedom, including arms with a 700 mm (0.7 m) span and an extended reach of up to 2.4 m. A lifting and tilting mechanism in its waist and legs gives it an operational height range of 0 to 2.4 m. A force-feedback system enables “rigid yet compliant” manipulation. The robot’s AI platform, AlphaBrain, integrates vision, language, and action for real-time environment perception and task planning. It interprets human facial expressions to infer intentions, and it uses semantic understanding to translate abstract commands into precise physical actions. Together, the hardware and software let the robot adapt to new environments without manual reprogramming, making it suitable for both desktop and industrial-scale applications.