Key Takeaways:

- Humanoid and quadrupedal robots rely on a rich variety of sensors—from cameras and LiDAR to IMUs and tactile sensors

- What is SLAM (Simultaneous Localization and Mapping)?

- What are IMUs (Inertial Measurement Units)?

- Understanding how these sensors mimic human senses reveals how robots perceive the world—and points to a future where robots may see, feel, and move as seamlessly as humans.

Ever wonder how a robot sees the world? In our recent article, we explored the “brain” of the robot: the powerful AI models, such as Large Language Models (LLMs) and Vision-Language Models (VLMs), that enable robots to think, understand language, and interpret what they see. These AI models act as the cognitive center, processing information and making decisions. Now it’s time to look at the other vital part of the robotics puzzle: the sensors. Just as the human body relies on eyes, ears, skin, and muscles to navigate the world, robots depend on a complex array of sensors to perceive their environment and make sense of it. Let’s dive into the fascinating world of sensors in humanoid and quadrupedal robots and discover how they allow machines to see, feel, balance, and move, much like we do.

The Many Eyes and Ears of Robots: Sensors in Robotics

Humanoid and quadrupedal robots come equipped with dozens of sensors that serve as their "senses." The robotics industry uses many types, including:

- LiDAR (Light Detection and Ranging): Think of this as a robot’s sense of depth. It scans the environment with laser pulses to build a 3D map of obstacles and open spaces.

- Cameras: Like human eyes, cameras capture detailed images and videos for object recognition.

- IMUs (Inertial Measurement Units): These act as the robot’s inner ear. They sense acceleration, rotation, and orientation relative to gravity, which helps the robot keep its balance while walking or running.
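To make the IMU idea concrete, here is a minimal Python sketch of how a tilt angle can be estimated from a single accelerometer reading while the robot is standing still. The function name `tilt_from_accel` and the axis convention (z pointing up, readings in m/s²) are assumptions made for illustration, not any particular robot's API. Real IMUs also blend in gyroscope data to stay accurate during motion.

```python
import math
from typing import Tuple


def tilt_from_accel(ax: float, ay: float, az: float) -> Tuple[float, float]:
    """Estimate (roll, pitch) in degrees from a static accelerometer reading.

    Assumes the sensor is at rest, so the only acceleration it feels is
    gravity, and that a level sensor reads roughly (0, 0, +9.81).
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return math.degrees(roll), math.degrees(pitch)


# Level robot: gravity shows up only on the z axis, so both angles are zero.
level_roll, level_pitch = tilt_from_accel(0.0, 0.0, 9.81)

# Robot lying on its side: gravity shows up on the y axis instead.
side_roll, side_pitch = tilt_from_accel(0.0, 9.81, 0.0)
```

A balance controller could compare these angles against the upright pose and adjust the legs when the tilt grows too large.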

Understanding SLAM: How Robots Map and Navigate Their World

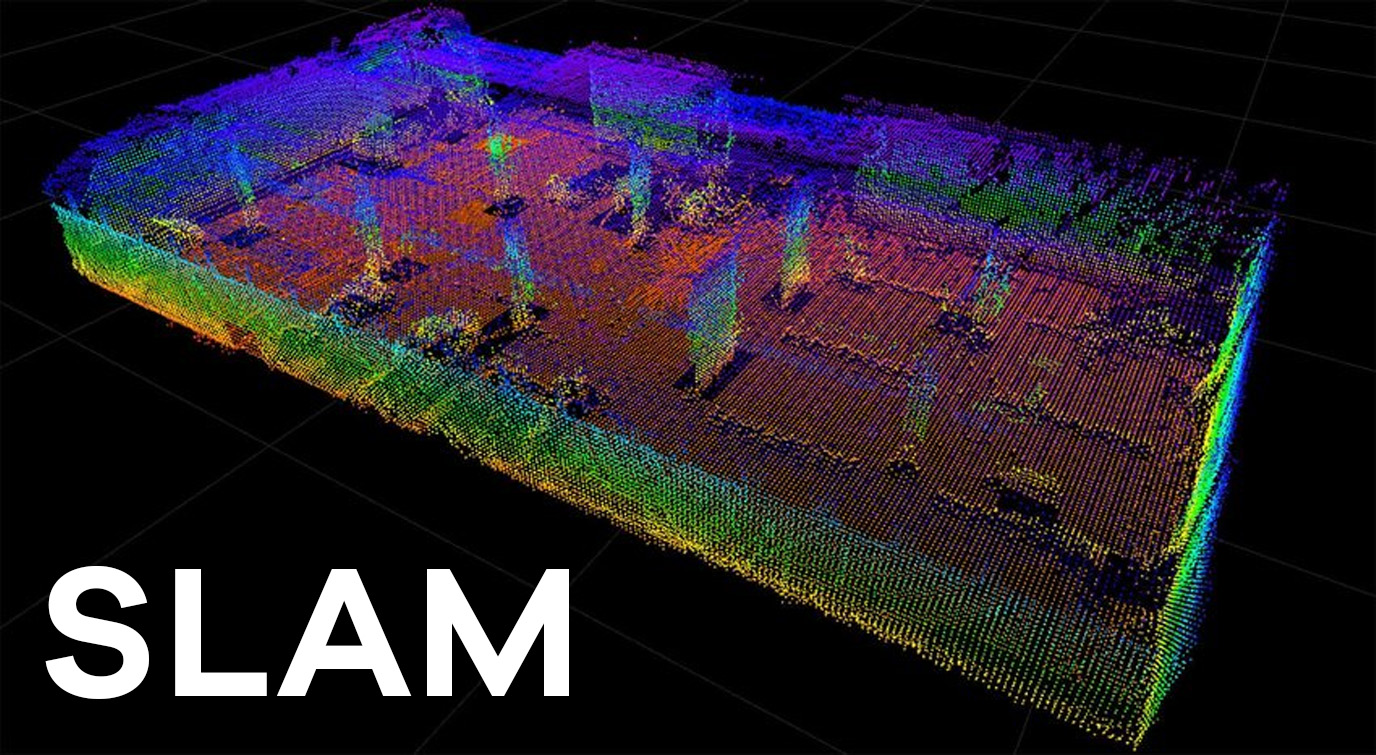

SLAM stands for Simultaneous Localization and Mapping. It’s a key technology that allows robots to build a map of an unknown environment while simultaneously figuring out where they are within that map—much like how we learn and remember a new room by walking around and taking note of landmarks.

There are three popular categories of SLAM, each using different sensors and approaches:

- Visual SLAM (vSLAM):

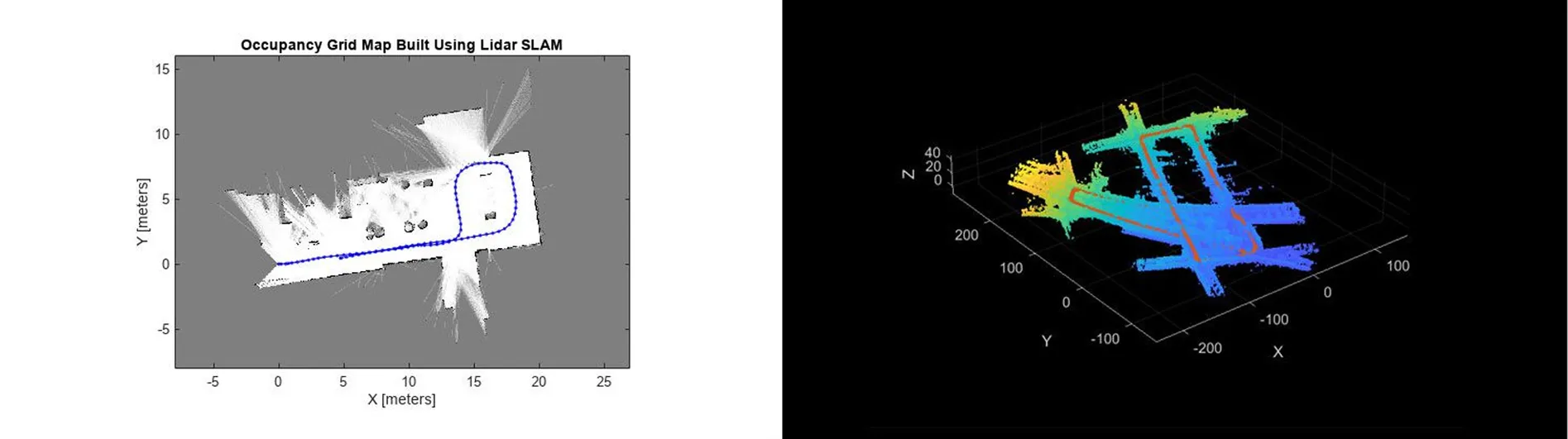

This uses cameras (monocular, stereo, RGB-D) to capture images and detect visual features such as edges, corners, and objects. The robot uses these landmarks to localize and map its environment.

- LiDAR SLAM:

Here, the robot employs LiDAR sensors, which send laser pulses and measure the time it takes for the beams to bounce back, creating a precise 3D point cloud map. LiDAR SLAM is especially effective in outdoor or low-light environments where cameras might struggle.

- Multi-Sensor SLAM:

This approach combines data from multiple sensor types—such as cameras, LiDAR, and inertial measurement units (IMUs)—to enhance mapping accuracy and localization robustness. The fusion of data helps overcome limitations of individual sensors.
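As a rough illustration of why fusing sensors helps, the Python sketch below combines two independent estimates of the same distance by weighting each one by how much it is trusted (inverse-variance weighting). This is a simplified stand-in for the filters used in real multi-sensor SLAM systems. The function name `fuse_estimates` and the example numbers are hypothetical.

```python
from typing import Tuple


def fuse_estimates(
    value_a: float, variance_a: float, value_b: float, variance_b: float
) -> Tuple[float, float]:
    """Fuse two independent measurements of the same quantity.

    Each measurement is weighted by the inverse of its variance, so the
    more certain sensor has more influence on the result.
    """
    weight_a = 1.0 / variance_a
    weight_b = 1.0 / variance_b
    fused_value = (weight_a * value_a + weight_b * value_b) / (weight_a + weight_b)
    fused_variance = 1.0 / (weight_a + weight_b)
    return fused_value, fused_variance


# Hypothetical readings: a camera says the wall is 2.0 m away (less certain),
# while the LiDAR says 2.2 m (more certain).
distance, variance = fuse_estimates(2.0, 0.04, 2.2, 0.01)
# distance is about 2.16 m and variance about 0.008, lower than either input.
```

The fused variance is always smaller than the variance of either sensor alone, which is the numerical version of "the fusion of data helps overcome limitations of individual sensors."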

Each SLAM method involves complex algorithms that process sensor data, detect landmarks or features, and optimize the robot’s position estimate while continuously updating the map. These technologies are what enable modern robots to move autonomously and safely in unfamiliar and dynamic environments.
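The mapping half of LiDAR SLAM can be sketched in a few lines of Python. The example below turns laser round-trip times into distances and then places each hit on a 2D map using the robot's current pose. It assumes the pose is already known, which a full SLAM system would have to estimate at the same time. The names `tof_to_range` and `scan_to_points` are made up for this illustration.

```python
import math
from typing import List, Tuple

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_to_range(round_trip_s: float) -> float:
    """Convert a laser pulse's round-trip time to a one-way distance in metres."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def scan_to_points(
    pose: Tuple[float, float, float],
    beams: List[Tuple[float, float]],
) -> List[Tuple[float, float]]:
    """Project LiDAR beams into map coordinates.

    pose  -- robot (x, y, heading) in the map frame, heading in radians
    beams -- list of (beam angle relative to heading, round-trip time in seconds)
    """
    x, y, heading = pose
    points = []
    for angle, round_trip_s in beams:
        distance = tof_to_range(round_trip_s)
        points.append(
            (
                x + distance * math.cos(heading + angle),
                y + distance * math.sin(heading + angle),
            )
        )
    return points


# A pulse that returns after about 66.7 nanoseconds hit something 10 m away.
ten_metres = tof_to_range(2 * 10.0 / SPEED_OF_LIGHT)

# Robot at (1, 2) facing "up" the map; one beam straight ahead hits a wall 3 m away.
hits = scan_to_points((1.0, 2.0, math.pi / 2), [(0.0, 2 * 3.0 / SPEED_OF_LIGHT)])
```

Repeating this for thousands of beams per scan is what produces the point cloud described above.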

Sensor Counts and Market Growth

Leading humanoid robots often combine dozens of sensors—such as 28 joint torque sensors, multiple 6-degree-of-freedom force sensors, several cameras, and IMUs—each critical for different tasks. The sensor market for robotics is booming, expected to exceed $10 billion by 2035, driven by demand for higher dexterity, perception, and safety.

Why These Sensors Matter

Without these "nervous system" components, robots couldn't perform delicate tasks, maintain balance, or understand their environment. Sensors are fundamental to letting robots operate safely alongside humans and adapt to real-world scenarios. Humanoid and quadrupedal robots do not yet move as smoothly or naturally as humans, but their sensor technology and AI integration continue to improve rapidly. With ongoing advances, their agility, balance, and responsiveness are expected to improve significantly, bringing them closer to fluid, instinctive motion.