- Many people are growing frustrated with AI due to unreliable and nonsensical outputs seen online and in professional settings.

- The article argues that the fault lies not with the AI technology itself, but with users who utilize it without diligence, fact-checking, or critical review.

- A key problem is the "copy-paste" mentality, where users accept AI-generated content as-is, leading to errors and professional irresponsibility, as seen in a real-world legal case.

- AI should be viewed as a powerful assistant or "super-intern" that complements human skills, speeding up workflows and handling grunt work, but always requiring human supervision.

- Effective use of AI requires skillful prompting and a mandatory final review process, where the human expert applies their knowledge, context, and ethical judgment.

- The ultimate responsibility for any output, good or bad, rests with the human user, not the AI tool.

You’ve probably seen it: an AI-generated image with a person sporting a stylish third arm. A product description that sounds like it was written by a space alien who just learned English from a 1950s vacuum cleaner manual. Or my personal favorite, an AI-powered chatbot assuring a customer that, yes, they can absolutely get a full refund for a car they bought three years ago. The internet is littered with these digital face-palms, leading a sizable chunk of the population to declare, "AI is stupid!"

But here’s a hot take for you: The AI isn’t the problem. The problem is the person holding the keyboard, treating a complex neural network like a magic lamp that grants wishes without any effort. It’s time we stopped blaming the tool and started looking at the person wielding it. Don't hate the AI; hate the person behind it.

The "Copy-Paste-and-Pray" Epidemic

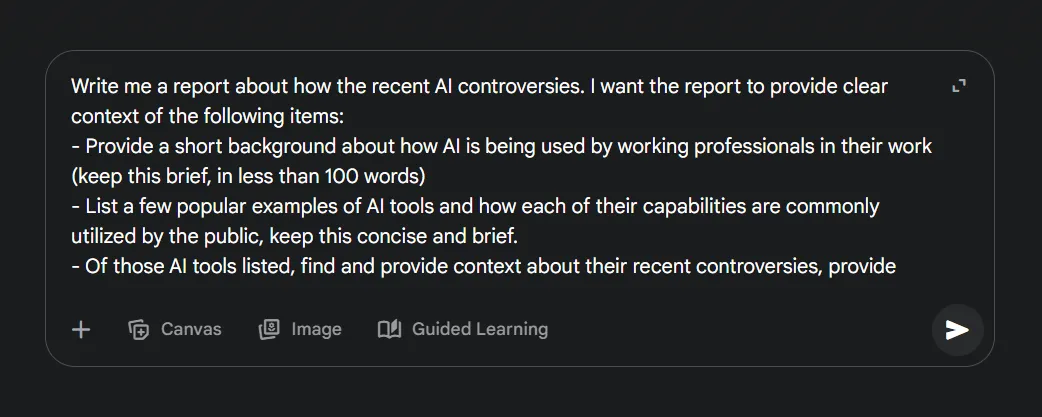

Artificial Intelligence tools, particularly Large Language Models (LLMs) like ChatGPT, Gemini, and their brethren, are now woven into the fabric of our digital lives. They’re helping to write code, draft emails, summarize meetings, and even create art. They are, essentially, everywhere. The issue is that they’ve been adopted with the same level of care and diligence as a teenager downloading a new social media app.

We're witnessing a global epidemic of what I call the "Copy-Paste-and-Pray" methodology. A user types a vague, one-sentence prompt, gets a wall of text back, copies it verbatim, pastes it into a report, a legal brief, or a client proposal, and then prays to the digital gods that nobody notices it’s complete nonsense.

This is not just sloppy; it's professionally irresponsible. A prime example is the now-infamous case of the New York lawyer who used ChatGPT for legal research. The AI, in its infinite and occasionally hallucinatory wisdom, invented several completely fake legal precedents. The lawyer submitted these fictitious cases to the court, resulting in professional embarrassment and sanctions (Mata v. Avianca, Inc., 2023). He didn't blame his faulty research methods; he blamed the AI for not being a licensed attorney. That’s like blaming your oven because you didn’t know how to bake.

The core of the problem is this: these AIs are designed to be convincing, not necessarily correct. They are masters of statistical pattern recognition. They predict the next most likely word in a sequence based on the mountains of data they were trained on. They don't "know" things or "understand" context in the human sense. Asking an LLM for factual information without verifying it is like asking a parrot that’s memorized Wikipedia to give you a lecture on quantum physics. It might sound plausible, but the substance could be entirely hollow.
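To make "predict the next most likely word" concrete, here is a deliberately tiny sketch. It is not how ChatGPT or Gemini are built (real LLMs are neural networks trained on vastly more data); it is a toy word-pair counter, and the training text and function names are made up for illustration. The point it shows is the same, though: the model repeats whatever pattern it saw most often, whether or not that pattern is true.

```python
from collections import Counter, defaultdict

# Made-up training text (an assumption for illustration only).
# The false claim appears twice, the true one only once.
corpus = (
    "the moon is made of rock . "
    "the moon is made of cheese . "
    "the moon is made of cheese ."
)

def train_bigrams(text):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def complete(counts, start, max_words=10):
    """Extend `start` one word at a time until a period or a dead end."""
    words = start.split()
    for _ in range(max_words):
        nxt = predict_next(counts, words[-1])
        if nxt is None or nxt == ".":
            break
        words.append(nxt)
    return " ".join(words)

model = train_bigrams(corpus)
print(complete(model, "the moon is made of"))  # the moon is made of cheese
```

The toy model confidently picks "cheese" because that is the more common continuation in its data, not because it checked anything. Fluent and wrong, exactly the failure mode described above.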

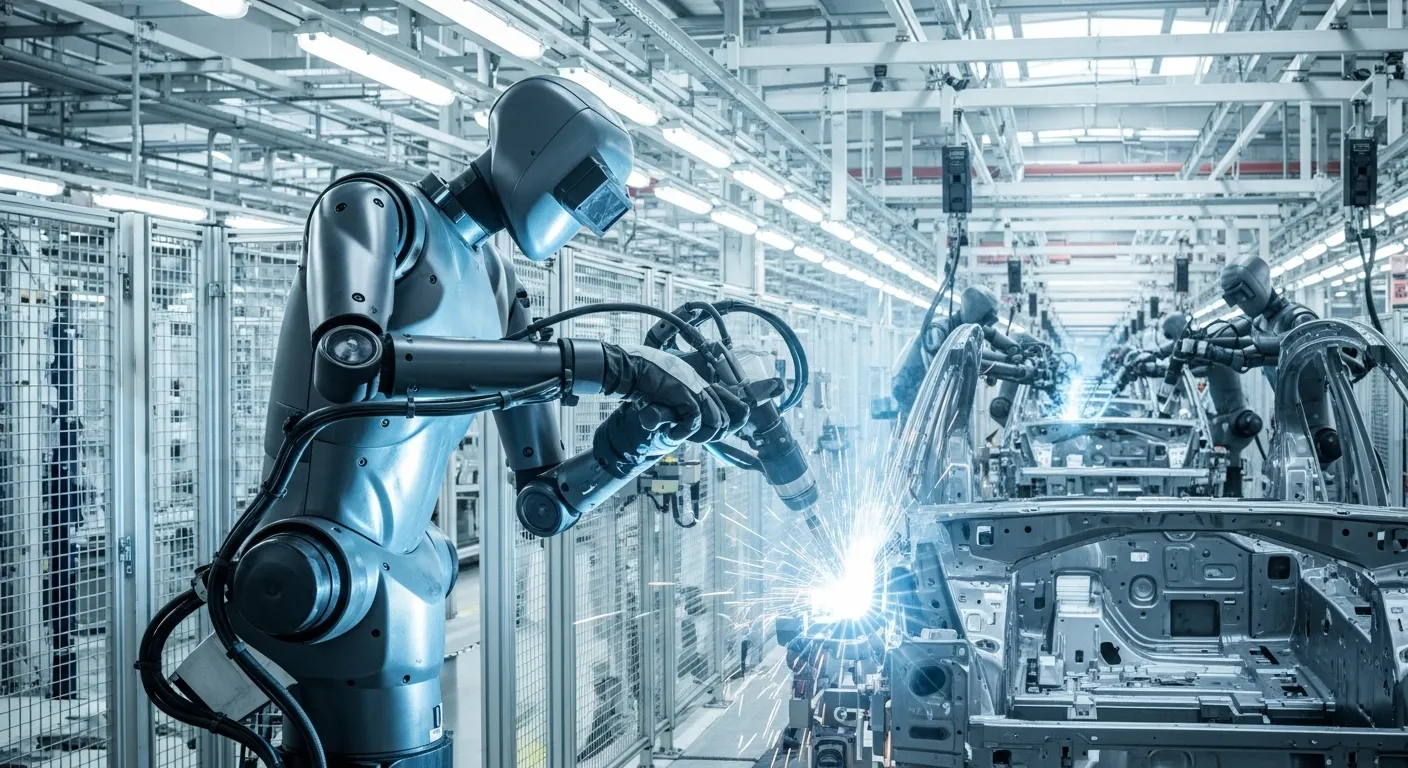

The AI Is Your Super-Intern, Not Your Replacement

So, if AI can be a fountain of eloquent nonsense, why bother? Because when used with a modicum of intelligence, it is arguably the most powerful productivity tool ever created. The trick is to change your mindset. Don't think of AI as an oracle or an automated expert who will do your job for you.

Think of it as the most brilliant, fastest, and slightly-too-eager-to-please intern you've ever had.

Your super-intern can:

- Draft a report in 30 seconds.

- Brainstorm 50 marketing slogans in the time it takes you to sip your coffee.

- Write boilerplate code, freeing you up to tackle the complex architectural problems.

- Summarize a 100-page document into key bullet points.

But, like any intern, its work requires supervision. You wouldn't let a first-year intern submit a final project proposal to your biggest client without reviewing it, would you? Of course not. You’d take their draft, thank them for the great starting point, and then apply your own expertise, experience, and critical thinking to refine it, fact-check it, and add the crucial human touch.

That’s precisely how AI should be used. It’s a force multiplier for your own skills, not a substitute for them. It automates the grunt work, allowing you to focus on strategy, creativity, and final execution. It’s there to complement you, not replace you.

The Human Touch: Prompt, Review, Refine

Getting good results from AI is a skill. Vague prompts get vague and generic answers. The art of "prompt engineering" (crafting detailed, context-rich instructions for the AI) is what separates a useful output from a useless one. But even with the perfect prompt, the job is only half done.

The final, non-negotiable step is always human review. This is where you check the facts, correct the tone, and inject nuance. Does the output align with your company's values? Is the legal advice sound? Is the medical information accurate? The AI can’t make those judgments. You can.
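For readers who like to see a process written down, the prompt-then-review habit can be sketched in a few lines of Python. Everything here is hypothetical: `build_prompt`, `Draft`, `publish`, and the three check names are invented for this illustration (the checks simply mirror the questions above), and no real AI service is called. Treat it as one possible shape for a review gate, not a prescribed tool.

```python
from dataclasses import dataclass, field

# Hypothetical checklist, named after the review questions in this article.
REQUIRED_CHECKS = ("facts_verified", "tone_corrected", "values_aligned")

def build_prompt(task, audience, constraints):
    """Assemble a context-rich prompt instead of a one-line request."""
    lines = [f"Task: {task}", f"Audience: {audience}", "Constraints:"]
    lines += [f"- {item}" for item in constraints]
    return "\n".join(lines)

@dataclass
class Draft:
    """An AI-generated draft plus the human checks completed so far."""
    text: str
    signed_off: set = field(default_factory=set)

    def sign_off(self, check):
        if check not in REQUIRED_CHECKS:
            raise ValueError(f"unknown check: {check}")
        self.signed_off.add(check)

    def missing_checks(self):
        return [c for c in REQUIRED_CHECKS if c not in self.signed_off]

def publish(draft):
    """Refuse to release a draft until a human has done every check."""
    missing = draft.missing_checks()
    if missing:
        raise RuntimeError("not reviewed yet: " + ", ".join(missing))
    return draft.text

prompt = build_prompt(
    task="Draft a product description for a cordless vacuum",
    audience="First-time buyers",
    constraints=["Under 120 words", "No claims we cannot document"],
)
draft = Draft(text="(AI-generated draft would go here)")
draft.sign_off("facts_verified")
print(draft.missing_checks())  # ['tone_corrected', 'values_aligned']
```

Calling `publish(draft)` at this point raises an error, which is the whole idea: the draft does not leave the building until a person has signed off on every check.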

In short, blame not the AI, but the human behind it for lousy outputs. A powerful tool in the hands of a fool is just a faster way to create a disaster. A calculator won't make you a mathematician, and a word processor won't make you a novelist. Similarly, an AI won't make you a competent professional. It will, however, make a competent professional faster, more efficient, and more creative than ever before.

The next time you see an AI-generated blunder, stifle your laugh (or don't) and remember: a human being likely approved that message. The AI was just following orders. Lousy decisions produce lousy results.