- A recap of what SLAM is and its key components, such as LiDAR, cameras, and the IMU

- What are the cons of a LiDAR camera? Why is it important?

- The alternatives for LiDAR

- Security concerns and environmental variables that might affect the cameras

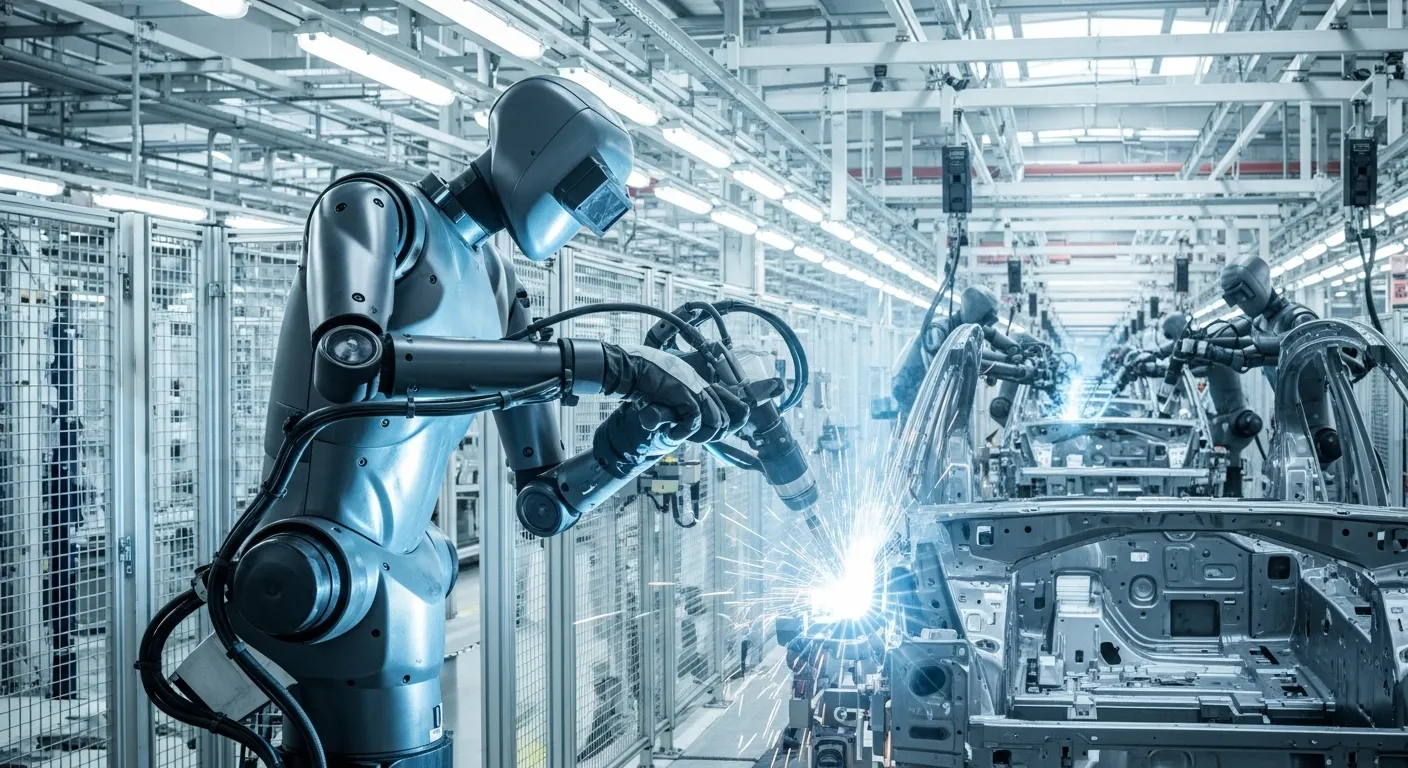

Have you ever noticed a curious trend in the latest generation of advanced humanoid and mobile robots? Take a look at platforms like the Unitree G1, Digit, Astribot S1, or the Booster T1. Whether it's mounted prominently on the robot's head or placed squarely on its chest, one piece of hardware seems to be universally present: the spinning or solid-state block of a LiDAR unit.

It's the most recognizable symbol of autonomy, but why is it everywhere?

In one of our previous articles, we explored the incredible power of SLAM (Simultaneous Localization and Mapping), the technological cornerstone that allows autonomous robots, from warehouse AMRs to self-driving cars, to build a map of their surroundings while simultaneously figuring out exactly where they are within it.

But for SLAM to work its magic, the robot needs "eyes" for data input. And not just one set! The sensory system is the lifeblood of an autonomous platform.

The Usual Suspects in Robot Vision

Robotics companies often rely on a sophisticated cocktail of vision sensors and hardware to give their machines spatial awareness. If you open the hood of a typical mobile robot, you will usually find a sensor array composed of three main players:

- LiDAR (Light Detection and Ranging): A laser-based sensor that shoots out pulses of light and measures the time it takes for them to bounce back. This is the gold standard for collecting a dense, precise 3D map of the environment, known as a point cloud.

- IMU (Inertial Measurement Unit): This is the robot's inner ear. It's a small electronic device that measures linear acceleration and angular velocity, typically through a combination of accelerometers and gyroscopes. The IMU is crucial in every robot because it provides high-frequency, short-term data on the robot's movement, which is indispensable for keeping track of position between less frequent sensor readings.

- RGB Cameras: These are your standard digital cameras, capturing colors and textures. They provide the rich, high-resolution visual data needed for tasks like object recognition, reading signs, or determining the type of surface beneath the robot.
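To make the LiDAR bullet concrete, here is a minimal sketch of the time-of-flight arithmetic behind a single laser return, and of how one return becomes a point in the point cloud. The function names and the sample timings are made up for illustration; a real LiDAR driver does this in firmware and hands you finished points.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # metres per second, in vacuum


def pulse_range_m(round_trip_s: float) -> float:
    """Range to the target. The pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def to_point(range_m: float, azimuth_rad: float, elevation_rad: float):
    """Convert one return (range plus beam angles) into an (x, y, z) point."""
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


# Hypothetical returns: (round-trip time in seconds, azimuth, elevation).
# A round trip of about 66.7 nanoseconds corresponds to roughly 10 m.
returns = [(66.7e-9, 0.0, 0.0), (33.4e-9, math.pi / 2, 0.0)]
point_cloud = [to_point(pulse_range_m(t), az, el) for t, az, el in returns]
```

Notice how tiny the timings are: a target 10 m away comes back in tens of nanoseconds, which is part of why precise LiDAR hardware is not cheap.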

In an effective SLAM system, these components are fused together. The LiDAR gives you the map, the IMU smooths out the odometry, and the camera provides the context.
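As a rough illustration of that fusion idea, the sketch below tracks position along a single axis. The IMU is integrated at every step, and an occasional LiDAR-derived position fix pulls the estimate back toward reality. Everything here (the `fuse_1d` name, the `gain` parameter, the sample numbers) is an assumption for illustration; production SLAM stacks typically use a Kalman filter or factor graph rather than this simple blend.

```python
def fuse_1d(accels, dt, lidar_fixes, gain=0.8):
    """Blend IMU dead reckoning with sparse LiDAR position fixes (1D toy model).

    accels      -- IMU acceleration samples in m/s^2, one per time step
    dt          -- time step in seconds
    lidar_fixes -- dict mapping a step index to a LiDAR-derived position in metres
    gain        -- how strongly a fix corrects the estimate (0 = ignore, 1 = trust fully)
    """
    position, velocity = 0.0, 0.0
    track = []
    for step, accel in enumerate(accels):
        # Prediction: integrate acceleration into velocity, then into position.
        velocity += accel * dt
        position += velocity * dt
        # Correction: when a LiDAR fix arrives, move part of the way toward it.
        # (Only position is corrected here; velocity is left untouched.)
        if step in lidar_fixes:
            position += gain * (lidar_fixes[step] - position)
        track.append(position)
    return track


# A stationary robot whose IMU has a small constant bias of 0.1 m/s^2.
biased_accels = [0.1] * 100
drift_only = fuse_1d(biased_accels, dt=0.01, lidar_fixes={})
with_fix = fuse_1d(biased_accels, dt=0.01, lidar_fixes={99: 0.0})
```

Comparing `drift_only[-1]` with `with_fix[-1]` shows the point of the pairing: the IMU alone drifts away from the true position, while a single LiDAR fix removes most of that accumulated error.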

The Chink in the LiDAR Armor

For years, LiDAR has reigned supreme, especially in outdoor and long-range applications like autonomous vehicles. Its long-range precision and ability to operate independently of ambient light are unmatched.

However, LiDAR is not the flawless sentinel we might imagine. Its imperfections can become critical weaknesses, particularly in challenging environments:

- Environmental Obstacles: LiDAR's laser pulses can struggle with certain materials. Highly reflective surfaces, like glass or polished metal, can confuse the sensor, causing the laser to scatter and return bad distance readings. Conversely, dark or highly absorptive surfaces may not reflect enough light back, creating a "blind spot" where the robot sees nothing.

- Adverse Weather: Rain, snow, and dense fog can all scatter the laser beam, severely degrading the quality of the point cloud data and leading to false positives or missed objects.

"LiDAR can be ineffective during heavy rain or low-hanging clouds. Because the laser pulses are based on reflection, LiDAR does not work well in areas or situations with high sun angles or large reflections."

- RoboticsBiz

- Cost and Complexity: High-resolution, long-range LiDAR units are often expensive and involve complex, moving mechanical parts, which increases their size, power consumption, and risk of mechanical failure.

- Security Vulnerabilities: As researchers have recently demonstrated, LiDAR systems can be susceptible to malicious laser attacks, where external lasers are used to "spoof" the sensor into seeing objects that don't exist, creating an illusion for the robot, or conversely, to make real objects vanish.

"The findings in this paper unveil unprecedentedly strong attack capabilities on LiDAR sensors, which can allow direct spoofing of fake cars and pedestrians and the vanishing of real cars in the AV's eye. These can be used to directly trigger various unsafe AV driving behaviors such as emergency brakes and front collisions."

- Dr. Qi Alfred Chen, UC Irvine Assistant Professor of Computer Science

The New Guard: A Symphony of Vision Alternatives

Because of these limitations—especially for robots working up-close or indoors—the market is rapidly adopting a diverse range of 3D vision sensors that challenge or complement LiDAR. These options often prioritize compactness, cost, and high-quality near-range depth perception.

- Structured Light Cameras (SL Cameras): A projector emits a known pattern of infrared light onto the scene. A camera then observes how this pattern deforms over the object's surface to calculate a highly detailed depth map via triangulation. The original Microsoft Kinect popularized this approach among robotics researchers and was frequently mounted on robot heads or chests; its successor, the Azure Kinect, switched to time-of-flight sensing.

- Time-of-Flight Cameras (ToF Cameras): Similar to LiDAR, this camera projects modulated infrared light and measures the phase shift for each pixel. The key difference is that a ToF camera captures the entire scene's depth in a single "shot" like a photograph, rather than scanning point-by-point. Boston Dynamics' Atlas incorporates ToF sensors for quick, robust, and accurate depth information, especially for tasks requiring spatial awareness of nearby objects.

- Stereo Vision Cameras: Mimicking human eyes, a stereo camera uses two or more RGB cameras placed a set distance apart, like a pair of binoculars. By analyzing the disparity (the difference in the position of objects between the two images), sophisticated algorithms calculate the depth of the scene.
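The depth math behind two of these sensors fits in a few lines. The sketch below uses the standard textbook relations: for a continuous-wave ToF camera, depth is proportional to the measured phase shift, and for a rectified stereo pair, depth is inversely proportional to disparity. Structured light relies on the same triangulation idea as stereo, with the projector standing in for one of the cameras. The function names and sample values are illustrative assumptions, not taken from any particular camera SDK.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_depth_m(phase_shift_rad: float, modulation_hz: float) -> float:
    """Depth from the phase shift of modulated light: d = c * phi / (4 * pi * f)."""
    return SPEED_OF_LIGHT_M_S * phase_shift_rad / (4.0 * math.pi * modulation_hz)


def tof_max_unambiguous_range_m(modulation_hz: float) -> float:
    """Beyond this distance the phase wraps past 2*pi and depths alias."""
    return SPEED_OF_LIGHT_M_S / (2.0 * modulation_hz)


def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth from disparity for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means the point is at infinity")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical sensor parameters, for illustration only.
tof_depth = tof_depth_m(phase_shift_rad=math.pi, modulation_hz=20e6)
tof_limit = tof_max_unambiguous_range_m(modulation_hz=20e6)
stereo_depth = stereo_depth_m(focal_length_px=700.0, baseline_m=0.12, disparity_px=42.0)
```

Both formulas hint at why these sensors are near-range specialists. A ToF camera's phase measurement wraps around after a fixed distance, and stereo disparity shrinks toward zero for far-away objects, so small pixel errors turn into large depth errors.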

The Gift of Multimodal Sight: Resilience and the Security Imperative

While the future of autonomous perception certainly lies in the intelligent fusion of sensors, it is vital to understand that technologies like Structured Light and Time-of-Flight cameras are not direct, all-weather replacements for LiDAR; rather, they are complementary specialists.

LiDAR, despite its higher cost and traditional mechanical complexity, remains superior at long range and performs better in adverse weather than its short-range, active-sensing cousins. The challenge, therefore, is not to pick a single winner, but to architect a system that cleverly leverages each sensor's strengths while compensating for its weaknesses.

However, with this enhanced capability comes a critical challenge: security. The very act of fusing heterogeneous data streams creates a wider attack surface. An adversary is no longer limited to blinding a single camera or jamming a single LiDAR unit; they can now launch adversarial attacks that exploit the fusion process itself. By generating "phantom" objects in a LiDAR point cloud or subtly manipulating the image data from a camera in a way that remains semantically consistent, attackers can deceive the core perception system into a safety-critical failure, such as sudden braking or ignoring a real obstacle.

Therefore, true resilience in autonomy demands that development moves beyond simple data integration to focus on secure fusion architectures. The final, crucial step in this journey is embedding mechanisms—like cross-modal anomaly detection and secure synchronization—to ensure that the robot's sophisticated, multimodal perception is not just comprehensive, but also trustworthy and impervious to malicious manipulation.
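To give a flavor of what cross-modal anomaly detection can look like, here is a deliberately simple sketch: compare the distance each sensor reports for the same tracked object, and flag anything that only one sensor sees or that the two sensors disagree on. The object IDs, the tolerance, and the assumption that detections from both sensors have already been matched to each other are all hypothetical; real systems have to solve that association problem first.

```python
def cross_modal_check(lidar_ranges_m, camera_depths_m, tolerance_m=0.5):
    """Flag objects whose LiDAR and camera depth estimates are inconsistent.

    Both arguments map an object ID to a distance in metres. Returns a list of
    (object_id, reason) tuples, sorted by object ID.
    """
    suspicious = []
    for obj_id in sorted(set(lidar_ranges_m) | set(camera_depths_m)):
        lidar = lidar_ranges_m.get(obj_id)
        camera = camera_depths_m.get(obj_id)
        if lidar is None or camera is None:
            # A spoofed "phantom" or a hidden object tends to appear in one modality only.
            suspicious.append((obj_id, "seen by one sensor only"))
        elif abs(lidar - camera) > tolerance_m:
            suspicious.append((obj_id, "range mismatch"))
    return suspicious


# Hypothetical detections, already matched across sensors by object ID.
lidar = {"pallet": 4.1, "phantom_car": 6.0, "shelf": 3.0}
camera = {"pallet": 4.0, "person": 2.5, "shelf": 4.2}
flags = cross_modal_check(lidar, camera)
```

A flagged object is not proof of an attack, since ordinary sensor blind spots produce the same symptoms, but it gives the robot a reason to slow down or fall back to a more conservative behavior.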